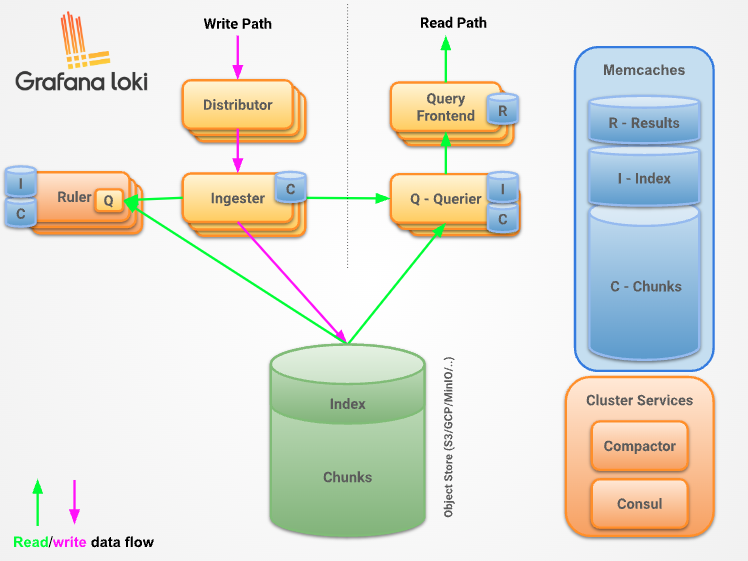

Loki system architecture

Analyzing the scheduler based on the Kubernetes v1.27.0 source code

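The main job of the k8s scheduler is to find the most suitable node for a newly created pod and then bind the pod to that node. Only after the binding does the kubelet on that node notice the pod and actually create it.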

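So how does the scheduler find the most suitable node for a pod? It works in two phases: predicates (filtering out nodes that cannot run the pod) and priorities (scoring the remaining nodes).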
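The Go program below is a minimal sketch that makes the two phases concrete. It is not kube-scheduler code. The `Pod`/`Node` types, the `isSchedulable`/`fitsResources` predicates, the `leastAllocated` priority and the `schedule` function are all hypothetical simplifications. In v1.27 the real logic lives in the scheduling framework's Filter and Score plugins, which also normalize and weight scores.

```go
package main

import "fmt"

// Hypothetical, simplified types for illustration only.
type Pod struct {
	Name       string
	CPURequest int64 // millicores
	MemRequest int64 // MiB
}

type Node struct {
	Name        string
	CPUFree     int64
	MemFree     int64
	Schedulable bool
}

// predicate reports whether a node can run the pod at all.
type predicate func(p Pod, n Node) bool

// priority scores a feasible node; higher is better.
type priority func(p Pod, n Node) int64

func isSchedulable(_ Pod, n Node) bool { return n.Schedulable }

func fitsResources(p Pod, n Node) bool {
	return n.CPUFree >= p.CPURequest && n.MemFree >= p.MemRequest
}

// freeRatio returns the percentage (0..100) of a resource left after placement.
func freeRatio(free, req int64) int64 {
	if free <= 0 {
		return 0
	}
	return (free - req) * 100 / free
}

// leastAllocated prefers nodes that keep more resources free after placement.
func leastAllocated(p Pod, n Node) int64 {
	return (freeRatio(n.CPUFree, p.CPURequest) + freeRatio(n.MemFree, p.MemRequest)) / 2
}

func schedule(p Pod, nodes []Node, preds []predicate, prios []priority) (string, error) {
	// Phase 1: predicates (filtering). Keep nodes that pass every predicate.
	var feasible []Node
	for _, n := range nodes {
		ok := true
		for _, pred := range preds {
			if !pred(p, n) {
				ok = false
				break
			}
		}
		if ok {
			feasible = append(feasible, n)
		}
	}
	if len(feasible) == 0 {
		return "", fmt.Errorf("no feasible node for pod %s", p.Name)
	}
	// Phase 2: priorities (scoring). Sum all priority scores and keep the best;
	// in this sketch ties go to the first node in the list.
	best, bestScore := "", int64(-1)
	for _, n := range feasible {
		var score int64
		for _, prio := range prios {
			score += prio(p, n)
		}
		if score > bestScore {
			best, bestScore = n.Name, score
		}
	}
	return best, nil
}

func main() {
	nodes := []Node{
		{Name: "node-a", CPUFree: 1000, MemFree: 2048, Schedulable: true},
		{Name: "node-b", CPUFree: 4000, MemFree: 8192, Schedulable: true},
		{Name: "node-c", CPUFree: 8000, MemFree: 16384, Schedulable: false},
	}
	pod := Pod{Name: "web", CPURequest: 500, MemRequest: 1024}
	node, err := schedule(pod, nodes, []predicate{isSchedulable, fitsResources}, []priority{leastAllocated})
	if err != nil {
		fmt.Println(err)
		return
	}
	// The bind step (recording the chosen node for the pod) is omitted here.
	fmt.Println("bind", pod.Name, "->", node)
}
```

Running it prints `bind web -> node-b`. The predicates filter out node-c because it is unschedulable. Node-b then scores 87 against node-a's 50, because more of its CPU and memory stay free after placement.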

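The production hosts were set up without socat, so istioctl could not forward ports. Instead, we exported the Envoy configuration directly and then inspected it with istioctl.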

Argo workflow

Argo Events

Note: the Kubernetes version installed with kind must be 1.23 or later.