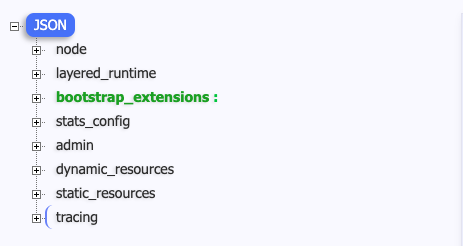

Envoy bootstrap configuration file

```shell
kubectl exec -ti productpage-v1-d4f8dfd97-p8xlb -c istio-proxy -- cat /etc/istio/proxy/envoy-rev.json
```

Structure of the configuration file

Node

Contains information about the node on which Envoy runs.

```yaml
id: sidecar~10.86.238.167~productpage-v1-d4f8dfd97-p8xlb.default~default.svc.cluster.local
cluster: productpage.default
locality: {}
metadata:
  ANNOTATIONS:
    kubectl.kubernetes.io/default-container: productpage
    kubectl.kubernetes.io/default-logs-container: productpage
    kubernetes.io/config.seen: 2023-03-24T09:42:07.297289187+08:00
    kubernetes.io/config.source: api
    prometheus.io/path: /stats/prometheus
    prometheus.io/port: "15020"
    prometheus.io/scrape: "true"
    sidecar.istio.io/status: '{"initContainers":["istio-init"],"containers":["istio-proxy"],"volumes":["workload-socket","credential-socket","workload-certs","istio-envoy","istio-data","istio-podinfo","istio-token","istiod-ca-cert"],"imagePullSecrets":null,"revision":"default"}'
  APP_CONTAINERS: productpage
  CLUSTER_ID: Kubernetes
  ENVOY_PROMETHEUS_PORT: 15090
  ENVOY_STATUS_PORT: 15021
  INSTANCE_IPS: 10.86.238.167
  INTERCEPTION_MODE: REDIRECT
  ISTIO_PROXY_SHA: 6e6b45cd824e414453ac8f0c81be540269ddff3e
  ISTIO_VERSION: 1.17.1
  LABELS:
    app: productpage
    security.istio.io/tlsMode: istio
    service.istio.io/canonical-name: productpage
    service.istio.io/canonical-revision: v1
    version: v1
  MESH_ID: cluster.local
  NAME: productpage-v1-d4f8dfd97-p8xlb
  NAMESPACE: default
  NODE_NAME: master
  OWNER: kubernetes://apis/apps/v1/namespaces/default/deployments/productpage-v1
  PILOT_SAN:
  - istiod.istio-system.svc
  PLATFORM_METADATA: {}
  POD_PORTS: '[{"containerPort":9080,"protocol":"TCP"}]'
  PROXY_CONFIG:
    binaryPath: /usr/local/bin/envoy
    concurrency: 2
    configPath: ./etc/istio/proxy
    controlPlaneAuthPolicy: MUTUAL_TLS
    discoveryAddress: istiod.istio-system.svc:15012
    drainDuration: 45s
    proxyAdminPort: 15000
    serviceCluster: istio-proxy
    statNameLength: 189
    statusPort: 15020
    terminationDrainDuration: 5s
    tracing:
      zipkin:
        address: zipkin.istio-system:9411
  SERVICE_ACCOUNT: bookinfo-productpage
  WORKLOAD_NAME: productpage-v1
```
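The id field packs four values separated by ~. Judging from this example (and Istio's usual convention), the layout is <proxy type>~<pod IP>~<pod name>.<namespace>~<DNS domain>. The minimal Python sketch below splits it under that assumption; the NodeId class and its field names are our own, not an Istio API.

```python
from dataclasses import dataclass


@dataclass
class NodeId:
    """Fields of an Istio node id (field names are illustrative, not Istio's)."""
    proxy_type: str   # e.g. "sidecar"
    ip: str           # pod IP
    pod: str          # pod name
    namespace: str    # pod namespace
    dns_domain: str   # "<namespace>.svc.<mesh domain>"


def parse_node_id(node_id: str) -> NodeId:
    parts = node_id.split("~")
    if len(parts) != 4:
        raise ValueError(f"expected 4 '~'-separated fields, got {len(parts)}")
    proxy_type, ip, pod_and_ns, dns_domain = parts
    # The third field is "<pod>.<namespace>". Namespace names cannot contain
    # dots, so splitting on the last dot separates the two safely.
    pod, _, namespace = pod_and_ns.rpartition(".")
    return NodeId(proxy_type, ip, pod, namespace, dns_domain)


nid = parse_node_id(
    "sidecar~10.86.238.167~productpage-v1-d4f8dfd97-p8xlb.default~default.svc.cluster.local"
)
print(nid.pod, nid.namespace)  # productpage-v1-d4f8dfd97-p8xlb default
```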

Admin

Configures the access log path of Envoy's admin interface and the admin (management) port.

```yaml
access_log:
- name: envoy.access_loggers.file
  typed_config:
    "@type": type.googleapis.com/envoy.extensions.access_loggers.file.v3.FileAccessLog
    path: /dev/null
profile_path: /var/lib/istio/data/envoy.prof
address:
  socket_address:
    address: 127.0.0.1
    port_value: 15000
```

Dynamic_resources

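Configures dynamic resources. Here LDS, CDS, and the ADS service are configured; both LDS and CDS are delivered over the ADS stream (ads: {}).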

```yaml
lds_config:
  ads: {}
  initial_fetch_timeout: 0s
  resource_api_version: V3
cds_config:
  ads: {}
  initial_fetch_timeout: 0s
  resource_api_version: V3
ads_config:
  api_type: GRPC
  set_node_on_first_message_only: true
  transport_api_version: V3
  grpc_services:
  - envoy_grpc:
      cluster_name: xds-grpc
```
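The chain from lds_config/cds_config to an actual address can be followed by hand: ads: {} means "use the ADS stream", ads_config names the xds-grpc cluster, and that cluster (defined under static_resources) points to a Unix domain socket. The Python sketch below walks that chain over a trimmed dict copy of this bootstrap. It is illustrative only, handles just the fields used here, and the unix: prefix in its return value is our own formatting, not Envoy's.

```python
def resolve_xds_target(bootstrap: dict, resource: str = "lds_config") -> str:
    """Follow <resource> -> ADS -> gRPC cluster -> first endpoint address."""
    dyn = bootstrap["dynamic_resources"]
    if "ads" not in dyn[resource]:
        raise ValueError(f"{resource} is not served over ADS")
    # ads_config names the cluster that hosts the xDS gRPC server.
    cluster_name = dyn["ads_config"]["grpc_services"][0]["envoy_grpc"]["cluster_name"]
    for cluster in bootstrap["static_resources"]["clusters"]:
        if cluster["name"] != cluster_name:
            continue
        lb_ep = cluster["load_assignment"]["endpoints"][0]["lb_endpoints"][0]
        addr = lb_ep["endpoint"]["address"]
        if "pipe" in addr:  # Unix domain socket ("unix:" prefix is our own notation)
            return f"unix:{addr['pipe']['path']}"
        sock = addr["socket_address"]
        return f"{sock['address']}:{sock['port_value']}"
    raise KeyError(f"cluster {cluster_name!r} not found in static_resources")


# Trimmed copy of the bootstrap fields shown in this article.
BOOTSTRAP = {
    "dynamic_resources": {
        "lds_config": {"ads": {}, "initial_fetch_timeout": "0s", "resource_api_version": "V3"},
        "cds_config": {"ads": {}, "initial_fetch_timeout": "0s", "resource_api_version": "V3"},
        "ads_config": {
            "api_type": "GRPC",
            "grpc_services": [{"envoy_grpc": {"cluster_name": "xds-grpc"}}],
        },
    },
    "static_resources": {
        "clusters": [{
            "name": "xds-grpc",
            "load_assignment": {
                "cluster_name": "xds-grpc",
                "endpoints": [{"lb_endpoints": [{"endpoint": {
                    "address": {"pipe": {"path": "./etc/istio/proxy/XDS"}}}}]}],
            },
        }]
    },
}

print(resolve_xds_target(BOOTSTRAP, "cds_config"))  # unix:./etc/istio/proxy/XDS
```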

Static_resources

Configures static resources: five clusters (prometheus_stats, agent, sds-grpc, xds-grpc, and zipkin) and two listeners (15090 and 15021).

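- The prometheus_stats cluster and the 15090 listener expose statistics in a Prometheus-compatible format: requests to /stats/prometheus on port 15090 are routed to the Envoy admin port 127.0.0.1:15000.
- The agent cluster and the 15021 listener serve the readiness check: requests to /healthz/ready on port 15021 are forwarded to pilot-agent at 127.0.0.1:15020.
- The sds-grpc cluster is used for the Secret Discovery Service (SDS), through which Envoy obtains certificates.
- The xds-grpc cluster corresponds to the ADS configuration in dynamic_resources above and specifies the server from which Envoy fetches dynamic resources.
- The zipkin cluster is the address of the external Zipkin tracing server; Envoy reports Zipkin-compatible tracing data to it.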

```yaml
clusters:
- name: prometheus_stats
  type: STATIC
  connect_timeout: 0.250s
  lb_policy: ROUND_ROBIN
  load_assignment:
    cluster_name: prometheus_stats
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address:
              protocol: TCP
              address: 127.0.0.1
              port_value: 15000
- name: agent
  type: STATIC
  connect_timeout: 0.250s
  lb_policy: ROUND_ROBIN
  load_assignment:
    cluster_name: agent
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address:
              protocol: TCP
              address: 127.0.0.1
              port_value: 15020
- name: sds-grpc
  type: STATIC
  typed_extension_protocol_options:
    envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
      "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
      explicit_http_config:
        http2_protocol_options: {}
  connect_timeout: 1s
  lb_policy: ROUND_ROBIN
  load_assignment:
    cluster_name: sds-grpc
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            pipe:
              path: ./var/run/secrets/workload-spiffe-uds/socket
- name: xds-grpc
  type: STATIC
  connect_timeout: 1s
  lb_policy: ROUND_ROBIN
  load_assignment:
    cluster_name: xds-grpc
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            pipe:
              path: ./etc/istio/proxy/XDS
  circuit_breakers:
    thresholds:
    - priority: DEFAULT
      max_connections: 100000
      max_pending_requests: 100000
      max_requests: 100000
    - priority: HIGH
      max_connections: 100000
      max_pending_requests: 100000
      max_requests: 100000
  upstream_connection_options:
    tcp_keepalive:
      keepalive_time: 300
  max_requests_per_connection: 1
  typed_extension_protocol_options:
    envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
      "@type": type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
      explicit_http_config:
        http2_protocol_options: {}
- name: zipkin
  type: STRICT_DNS
  respect_dns_ttl: true
  dns_lookup_family: V4_ONLY
  dns_refresh_rate: 30s
  connect_timeout: 1s
  lb_policy: ROUND_ROBIN
  load_assignment:
    cluster_name: zipkin
    endpoints:
    - lb_endpoints:
      - endpoint:
          address:
            socket_address:
              address: zipkin.istio-system
              port_value: 9411
listeners:
- address:
    socket_address:
      protocol: TCP
      address: 0.0.0.0
      port_value: 15090
  filter_chains:
  - filters:
    - name: envoy.filters.network.http_connection_manager
      typed_config:
        "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
        codec_type: AUTO
        stat_prefix: stats
        route_config:
          virtual_hosts:
          - name: backend
            domains:
            - "*"
            routes:
            - match:
                prefix: /stats/prometheus
              route:
                cluster: prometheus_stats
        http_filters:
        - name: envoy.filters.http.router
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
- address:
    socket_address:
      protocol: TCP
      address: 0.0.0.0
      port_value: 15021
  filter_chains:
  - filters:
    - name: envoy.filters.network.http_connection_manager
      typed_config:
        "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
        codec_type: AUTO
        stat_prefix: agent
        route_config:
          virtual_hosts:
          - name: backend
            domains:
            - "*"
            routes:
            - match:
                prefix: /healthz/ready
              route:
                cluster: agent
        http_filters:
        - name: envoy.filters.http.router
          typed_config:
            "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
```

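Both static listeners use a single catch-all virtual host with one prefix route. The Python sketch below models how such a request is mapped to a cluster: routes are tried in order, the first matching prefix wins, and an unmatched request gets no cluster (Envoy's router would answer 404). The STATIC_ROUTES table is a hand-made summary of the YAML above, not something read from Envoy.

```python
from typing import Optional

# Hand-made summary of the two static listeners: port -> ordered (prefix, cluster).
STATIC_ROUTES = {
    15090: [("/stats/prometheus", "prometheus_stats")],
    15021: [("/healthz/ready", "agent")],
}


def match_route(port: int, path: str) -> Optional[str]:
    """Return the upstream cluster for a request, or None when no route matches."""
    for prefix, cluster in STATIC_ROUTES.get(port, []):
        # Envoy's "prefix" match is a plain string-prefix comparison.
        if path.startswith(prefix):
            return cluster
    return None


print(match_route(15021, "/healthz/ready"))  # agent
```

So a Prometheus scrape of :15090/stats/prometheus ends up at the admin port 127.0.0.1:15000 via prometheus_stats, and a readiness probe on :15021/healthz/ready ends up at pilot-agent on 127.0.0.1:15020 via agent.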
Tracing

Configures distributed tracing. The collector cluster configured here is the zipkin cluster defined in static_resources above.

```yaml
http:
  name: envoy.tracers.zipkin
  typed_config:
    "@type": type.googleapis.com/envoy.config.trace.v3.ZipkinConfig
    collector_cluster: zipkin
    collector_endpoint: /api/v2/spans
    collector_endpoint_version: HTTP_JSON
    trace_id_128bit: true
    shared_span_context: false
```
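With trace_id_128bit: true, Envoy generates 128-bit trace IDs, which show up as 32 lowercase hex characters in the Zipkin B3 header x-b3-traceid instead of 16; span IDs stay 64-bit. The Python sketch below only illustrates that difference in ID width. It is not how Envoy generates IDs internally, and new_b3_headers is a hypothetical helper.

```python
import secrets


def new_b3_headers(trace_id_128bit: bool = True, sampled: bool = True) -> dict:
    """Build a fresh set of B3 propagation headers (illustrative helper)."""
    # token_hex(n) returns 2*n lowercase hex characters.
    trace_id = secrets.token_hex(16 if trace_id_128bit else 8)  # 32 or 16 hex chars
    span_id = secrets.token_hex(8)                              # always 64-bit
    return {
        "x-b3-traceid": trace_id,
        "x-b3-spanid": span_id,
        "x-b3-sampled": "1" if sampled else "0",
    }


headers = new_b3_headers()
print(len(headers["x-b3-traceid"]))  # 32
```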

Analyzing the Envoy configuration

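The bootstrap file already reveals the basic mechanism by which Istio uses Envoy for service discovery and traffic management: the xDS server information is written into Envoy's bootstrap configuration as a static resource, and once Envoy starts it fetches dynamic resources from that xDS server, including the services in the mesh and the routing rules.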

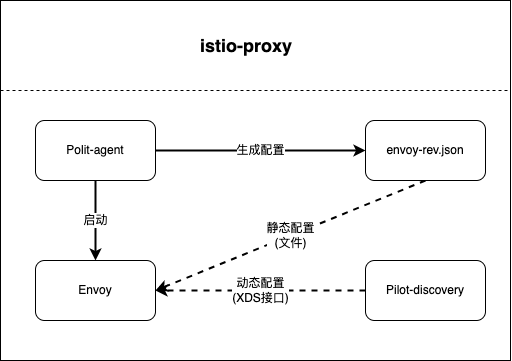

Envoy configuration initialization flow:

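1. Pilot-agent generates Envoy's bootstrap file envoy-rev.json from its startup arguments and configuration from the Kubernetes API server. The file tells Envoy to fetch dynamic configuration from the xDS server and contains that server's address, i.e. Pilot in the control plane.
2. Pilot-agent starts the Envoy process with envoy-rev.json.
3. Envoy obtains the Pilot address from the bootstrap configuration and fetches dynamic configuration such as Listeners, Clusters, and Routes from Pilot through the xDS APIs.
4. Envoy starts Listeners according to the dynamic configuration and, following each Listener's configuration together with the Routes and Clusters, processes the intercepted traffic.

The configuration actually in effect in Envoy is therefore the combination of the static configuration from the bootstrap file and the dynamic configuration obtained from Pilot. Next, we retrieve Envoy's complete configuration through its admin interface.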

```shell
# kubectl exec -ti productpage-v1-d4f8dfd97-p8xlb -c istio-proxy -- curl localhost:15000/config_dump > envoy-productpage-v1-d4f8dfd97-p8xlb.json
root@master:~# wc -l envoy-productpage-v1-d4f8dfd97-p8xlb.json
11870 envoy-productpage-v1-d4f8dfd97-p8xlb.json
```

The file is too long to reproduce here, so it has been uploaded to GitHub:

envoy-productpage-v1-d4f8dfd97-p8xlb.yaml

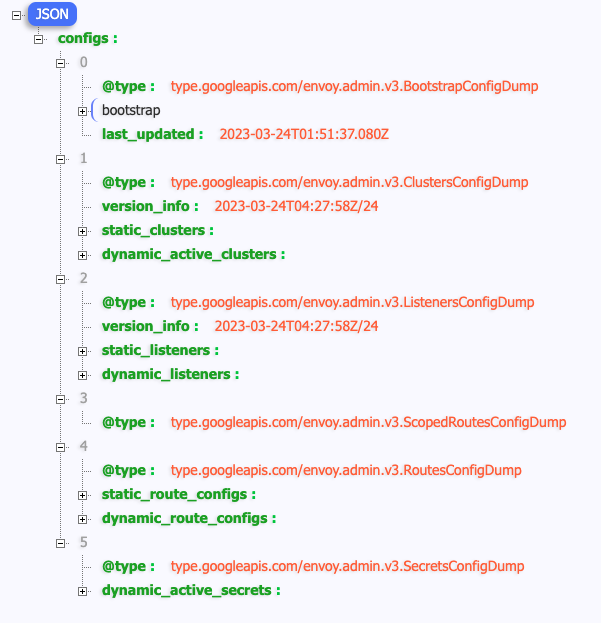

Configuration structure

Bootstrap

This part is Envoy's bootstrap configuration, already covered above, so it is not repeated here.

Clusters

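In Envoy, a Cluster is a group of upstream hosts that provide a service. A Cluster contains one or more endpoints, each of which can serve requests, and Envoy distributes requests among these endpoints according to a load-balancing algorithm.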

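The clusters configuration of Productpage has two parts, static_clusters and dynamic_active_clusters. static_clusters comes from the bootstrap configuration in envoy-rev.json, while dynamic_active_clusters holds the dynamic service information obtained from the Istio control plane through the xDS APIs.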
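The dumped JSON can be split along the same line. The sketch below assumes the layout of Envoy's /config_dump output: a top-level configs list whose cluster section has "@type" type.googleapis.com/envoy.admin.v3.ClustersConfigDump and contains static_clusters and dynamic_active_clusters, each entry wrapping the actual Cluster under a cluster key. The helper names are hypothetical.

```python
import json
from typing import Dict, List

CLUSTERS_DUMP_TYPE = "type.googleapis.com/envoy.admin.v3.ClustersConfigDump"


def cluster_names(config_dump: dict) -> Dict[str, List[str]]:
    """Group cluster names from a parsed /config_dump into static vs dynamic."""
    for section in config_dump.get("configs", []):
        if section.get("@type") == CLUSTERS_DUMP_TYPE:
            return {
                "static": [c["cluster"]["name"] for c in section.get("static_clusters", [])],
                "dynamic": [c["cluster"]["name"]
                            for c in section.get("dynamic_active_clusters", [])],
            }
    return {"static": [], "dynamic": []}


def load_cluster_names(path: str) -> Dict[str, List[str]]:
    """Read a saved dump, e.g. envoy-productpage-v1-d4f8dfd97-p8xlb.json."""
    with open(path, encoding="utf-8") as f:
        return cluster_names(json.load(f))
```

Running load_cluster_names on the dump saved above should list the five bootstrap clusters under "static" and the xDS-delivered ones (outbound|..., inbound|..., BlackHoleCluster, PassthroughCluster, ...) under "dynamic".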

Dynamic_active_clusters

The dynamic clusters fall into the following categories:

- Outbound Cluster
- Inbound Cluster
- BlackHoleCluster
- PassthroughCluster

Outbound Cluster

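Clusters of this type make up the vast majority; they correspond to services outside the node where Envoy runs. Take reviews as an example: for Productpage, reviews is an external service, so its Cluster name contains the word outbound.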

```shell
# ./istioctl pc c -f envoy-productpage-v1-d4f8dfd97-p8xlb.json --direction outbound
SERVICE FQDN                                         PORT   SUBSET       DIRECTION  TYPE  DESTINATION RULE
details.default.svc.cluster.local                    9080   -            outbound   EDS   details.default
details.default.svc.cluster.local                    9080   v1           outbound   EDS   details.default
details.default.svc.cluster.local                    9080   v2           outbound   EDS   details.default
istio-egressgateway.istio-system.svc.cluster.local   80     -            outbound   EDS
istio-egressgateway.istio-system.svc.cluster.local   443    -            outbound   EDS
istio-ingressgateway.istio-system.svc.cluster.local  80     -            outbound   EDS
istio-ingressgateway.istio-system.svc.cluster.local  443    -            outbound   EDS
istio-ingressgateway.istio-system.svc.cluster.local  15021  -            outbound   EDS
istio-ingressgateway.istio-system.svc.cluster.local  15443  -            outbound   EDS
istio-ingressgateway.istio-system.svc.cluster.local  31400  -            outbound   EDS
istiod.istio-system.svc.cluster.local                443    -            outbound   EDS
istiod.istio-system.svc.cluster.local                15010  -            outbound   EDS
istiod.istio-system.svc.cluster.local                15012  -            outbound   EDS
istiod.istio-system.svc.cluster.local                15014  -            outbound   EDS
kubernetes.default.svc.cluster.local                 443    -            outbound   EDS
productpage.default.svc.cluster.local                9080   -            outbound   EDS   productpage.default
productpage.default.svc.cluster.local                9080   v1           outbound   EDS   productpage.default
ratings.default.svc.cluster.local                    9080   -            outbound   EDS   ratings.default
ratings.default.svc.cluster.local                    9080   v1           outbound   EDS   ratings.default
ratings.default.svc.cluster.local                    9080   v2           outbound   EDS   ratings.default
ratings.default.svc.cluster.local                    9080   v2-mysql     outbound   EDS   ratings.default
ratings.default.svc.cluster.local                    9080   v2-mysql-vm  outbound   EDS   ratings.default
reviews.default.svc.cluster.local                    9080   -            outbound   EDS
```
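Each row is one Envoy cluster whose name encodes direction|port|subset|FQDN, for example outbound|9080||reviews.default.svc.cluster.local; the subset field is empty for the service-wide cluster, while subsets such as v1 come from the DestinationRule listed in the last column. The Python sketch below splits such names, assuming exactly that four-field layout (as also seen in inbound|9080|| later on). ClusterKey is a hypothetical type.

```python
from typing import NamedTuple, Optional


class ClusterKey(NamedTuple):
    direction: str          # "outbound" or "inbound"
    port: int
    subset: Optional[str]   # None when the subset field is empty
    host: Optional[str]     # None when empty, e.g. "inbound|9080||"


def parse_cluster_name(name: str) -> ClusterKey:
    parts = name.split("|")
    if len(parts) != 4:
        # Special clusters such as BlackHoleCluster do not follow this layout.
        raise ValueError(f"not a direction|port|subset|host cluster name: {name!r}")
    direction, port, subset, host = parts
    return ClusterKey(direction, int(port), subset or None, host or None)


print(parse_cluster_name("outbound|9080|v2-mysql|ratings.default.svc.cluster.local").subset)
# v2-mysql
```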

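The cluster configuration of the reviews service shows that its type is EDS, meaning its endpoints are discovered dynamically. Its eds_config points to ads, which in turn leads to the xds-grpc cluster configured in the static resources, i.e. the Pilot address. (Strictly speaking, xds-grpc points to the local Unix socket ./etc/istio/proxy/XDS, served by pilot-agent, which relays the xDS stream to istiod at the discoveryAddress istiod.istio-system.svc:15012.)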

```shell
./istioctl pc c productpage-v1-d4f8dfd97-p8xlb.default --fqdn reviews.default.svc.cluster.local -o yaml
```

```yaml
- circuitBreakers:
    thresholds:
    - maxConnections: 4294967295
      maxPendingRequests: 4294967295
      maxRequests: 4294967295
      maxRetries: 4294967295
      trackRemaining: true
  commonLbConfig:
    localityWeightedLbConfig: {}
  connectTimeout: 10s
  edsClusterConfig:
    edsConfig:
      ads: {}
      initialFetchTimeout: 0s
      resourceApiVersion: V3
    serviceName: outbound|9080||reviews.default.svc.cluster.local
  filters:
  - name: istio.metadata_exchange
    typedConfig:
      '@type': type.googleapis.com/envoy.tcp.metadataexchange.config.MetadataExchange
      protocol: istio-peer-exchange
  lbPolicy: LEAST_REQUEST
  metadata:
    filterMetadata:
      istio:
        default_original_port: 9080
        services:
        - host: reviews.default.svc.cluster.local
          name: reviews
          namespace: default
  name: outbound|9080||reviews.default.svc.cluster.local
  transportSocketMatches:
  - match:
      tlsMode: istio
    name: tlsMode-istio
    transportSocket:
      name: envoy.transport_sockets.tls
      typedConfig:
        '@type': type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.UpstreamTlsContext
        commonTlsContext:
          alpnProtocols:
          - istio-peer-exchange
          - istio
          combinedValidationContext:
            defaultValidationContext:
              matchSubjectAltNames:
              - exact: spiffe://cluster.local/ns/default/sa/bookinfo-reviews
            validationContextSdsSecretConfig:
              name: ROOTCA
              sdsConfig:
                apiConfigSource:
                  apiType: GRPC
                  grpcServices:
                  - envoyGrpc:
                      clusterName: sds-grpc
                  setNodeOnFirstMessageOnly: true
                  transportApiVersion: V3
                initialFetchTimeout: 0s
                resourceApiVersion: V3
          tlsCertificateSdsSecretConfigs:
          - name: default
            sdsConfig:
              apiConfigSource:
                apiType: GRPC
                grpcServices:
                - envoyGrpc:
                    clusterName: sds-grpc
                setNodeOnFirstMessageOnly: true
                transportApiVersion: V3
              initialFetchTimeout: 0s
              resourceApiVersion: V3
          tlsParams:
            tlsMaximumProtocolVersion: TLSv1_3
            tlsMinimumProtocolVersion: TLSv1_2
        sni: outbound_.9080_._.reviews.default.svc.cluster.local
  - match: {}
    name: tlsMode-disabled
    transportSocket:
      name: envoy.transport_sockets.raw_buffer
      typedConfig:
        '@type': type.googleapis.com/envoy.extensions.transport_sockets.raw_buffer.v3.RawBuffer
  type: EDS
```
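The sni value in the tlsMode-istio transport socket encodes the same fields as the cluster name, but with _. as the separator: outbound_.9080_._.reviews.default.svc.cluster.local has an empty subset between the second and third separators. The Python sketch below assumes the layout <direction>_.<port>_.<subset>_.<host>, inferred from this single value; build_sni and parse_sni are hypothetical helpers, and subset names containing "_." would break the parser.

```python
from typing import Tuple


def build_sni(port: int, subset: str, host: str, direction: str = "outbound") -> str:
    """Assemble an SNI in the layout observed above (empty subset -> "_._.")."""
    return f"{direction}_.{port}_.{subset}_.{host}"


def parse_sni(sni: str) -> Tuple[str, int, str, str]:
    """Split an SNI back into (direction, port, subset, host)."""
    parts = sni.split("_.", 3)  # at most 3 splits: the host keeps its own dots
    if len(parts) != 4:
        raise ValueError(f"unexpected SNI layout: {sni!r}")
    direction, port, subset, host = parts
    return direction, int(port), subset, host


print(build_sni(9080, "", "reviews.default.svc.cluster.local"))
# outbound_.9080_._.reviews.default.svc.cluster.local
```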

View the endpoints behind this cluster:

```shell
# ./istioctl pc endpoint productpage-v1-d4f8dfd97-p8xlb.default --cluster 'outbound|9080||reviews.default.svc.cluster.local'
ENDPOINT               STATUS      OUTLIER CHECK     CLUSTER
10.86.239.128:9080     HEALTHY     OK                outbound|9080||reviews.default.svc.cluster.local
10.86.239.14:9080      HEALTHY     OK                outbound|9080||reviews.default.svc.cluster.local
10.86.239.77:9080      HEALTHY     OK                outbound|9080||reviews.default.svc.cluster.local
root@master:~/istio-1.17.1/bin# kubectl get pod -l app=reviews
NAME                          READY   STATUS    RESTARTS   AGE
reviews-v1-5896f547f5-cp49b   2/2     Running   0          4d7h
reviews-v2-5d99885bc9-xrltl   2/2     Running   0          4d7h
reviews-v3-589cb4d56c-dknwb   2/2     Running   0          4d7h
```
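The reviews cluster uses lbPolicy: LEAST_REQUEST across these three endpoints. For equally weighted hosts, Envoy's documentation describes this policy as sampling a small number of random hosts (2 by default) and picking the one with the fewest active requests. The Python sketch below shows only that idea; the active-request counts are made up, sampling is done with replacement, and Envoy's real implementation additionally handles weights and host health.

```python
import random
from typing import Dict, Optional


def pick_least_request(active: Dict[str, int], choice_count: int = 2,
                       rng: Optional[random.Random] = None) -> str:
    """Sample choice_count hosts and return the one with the fewest active requests."""
    if not active:
        raise ValueError("no endpoints")
    if rng is None:
        rng = random.Random()
    hosts = list(active)
    candidates = [rng.choice(hosts) for _ in range(choice_count)]
    return min(candidates, key=lambda h: active[h])


# Hypothetical in-flight request counts for the three reviews endpoints.
ACTIVE = {"10.86.239.128:9080": 5, "10.86.239.14:9080": 0, "10.86.239.77:9080": 5}
print(pick_least_request(ACTIVE))
```

Comparing only a couple of random candidates avoids scanning every host while still steering traffic away from busy endpoints.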

Inbound Cluster

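Clusters of this type correspond to the service on the node where Envoy runs; a request received by that service is, naturally, an inbound request. The Envoy in the Productpage Pod has only one Inbound Cluster, for productpage. Its type is ORIGINAL_DST, so requests go to their original destination, and Envoy binds the upstream connections to the source address 127.0.0.6 (upstreamBindConfig).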

```shell
# ./istioctl pc c -f envoy-productpage-v1-d4f8dfd97-p8xlb.json --direction inbound
SERVICE FQDN     PORT     SUBSET     DIRECTION     TYPE             DESTINATION RULE
                 9080     -          inbound       ORIGINAL_DST
```

```yaml
# ./istioctl pc c -f envoy-productpage-v1-d4f8dfd97-p8xlb.json --direction inbound -o yaml
- circuitBreakers:
    thresholds:
    - maxConnections: 4294967295
      maxPendingRequests: 4294967295
      maxRequests: 4294967295
      maxRetries: 4294967295
      trackRemaining: true
  commonLbConfig: {}
  connectTimeout: 10s
  lbPolicy: CLUSTER_PROVIDED
  metadata:
    filterMetadata:
      istio:
        services:
        - host: productpage.default.svc.cluster.local
          name: productpage
          namespace: default
  name: inbound|9080||
  type: ORIGINAL_DST
  upstreamBindConfig:
    sourceAddress:
      address: 127.0.0.6
      portValue: 0
```

About the 127.0.0.6 IP address

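127.0.0.6 is Istio's default inbound passthrough bind address, hard-coded in the Istio source; as the dumps show, both the inbound|9080|| cluster and InboundPassthroughClusterIpv4 bind their upstream connections to it. It is the source address Envoy uses when forwarding inbound traffic onward. Its purpose is to let this traffic, which leaves Envoy the same way Outbound traffic does, be delivered straight to the application container in the Pod (i.e. Passthrough) without being captured again by the Outbound Handler: the traffic is addressed to the Pod itself, not genuinely leaving it. For why this particular IP was chosen, see Istio Issue-29603.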
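The reason the source address matters lies in the iptables rules that istio-init installs in the ISTIO_OUTPUT chain. The Python function below is a simplified model of those rules as commonly documented for recent Istio versions in REDIRECT mode (group-id rules omitted); it assumes the sidecar runs as UID 1337, that ISTIO_IN_REDIRECT leads to the inbound port 15006 and ISTIO_REDIRECT to the outbound port 15001. Check the istio-init container logs for the exact rules of your version.

```python
PROXY_UID = 1337  # assumed UID of the istio-proxy container


def istio_output_verdict(src: str, dst: str, out_iface: str, uid: int,
                         proxy_uid: int = PROXY_UID) -> str:
    """Simplified model of the ISTIO_OUTPUT chain for one outgoing packet."""
    on_lo = out_iface == "lo"
    if src == "127.0.0.6" and on_lo:
        return "RETURN"             # Envoy's passthrough traffic to the app: leave it alone
    if on_lo and uid == proxy_uid and dst != "127.0.0.1":
        return "ISTIO_IN_REDIRECT"  # Envoy talking to the pod's own IP: treat as inbound
    if on_lo and uid != proxy_uid:
        return "RETURN"             # app-to-app traffic over loopback
    if uid == proxy_uid:
        return "RETURN"             # Envoy's own outbound traffic is not re-captured
    if dst == "127.0.0.1":
        return "RETURN"
    return "ISTIO_REDIRECT"         # application outbound traffic -> Envoy 15001
```

For example, when Envoy forwards an inbound request to the pod IP 10.86.238.167:9080, the packet goes out over lo with UID 1337. With source 127.0.0.6 it hits the first rule and reaches productpage directly; with any other source it would be redirected back into Envoy's inbound port, producing a loop.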

BlackHoleCluster

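This is a special Cluster with no backend hosts configured to handle requests. As its name suggests, requests sent to it are simply dropped. When a request cannot be matched to any destination service, it is sent to this cluster; this applies when the mesh's outboundTrafficPolicy mode is REGISTRY_ONLY, whereas under the default ALLOW_ANY mode such requests go to PassthroughCluster.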

```shell
# ./istioctl pc c -f envoy-productpage-v1-d4f8dfd97-p8xlb.json --fqdn BlackHoleCluster
SERVICE FQDN         PORT     SUBSET     DIRECTION     TYPE       DESTINATION RULE
BlackHoleCluster     -        -          -             STATIC
# ./istioctl pc c -f envoy-productpage-v1-d4f8dfd97-p8xlb.json --fqdn BlackHoleCluster -o yaml
connectTimeout: 10s
name: BlackHoleCluster
type: STATIC
```

PassthroughCluster

In contrast to BlackHoleCluster, requests sent to PassthroughCluster are forwarded directly to the original destination they asked for; Envoy does not re-route them.

```shell
# ./istioctl pc c -f envoy-productpage-v1-d4f8dfd97-p8xlb.json --fqdn PassthroughCluster
SERVICE FQDN                      PORT     SUBSET     DIRECTION     TYPE             DESTINATION RULE
InboundPassthroughClusterIpv4     -        -          -             ORIGINAL_DST
PassthroughCluster                -        -          -             ORIGINAL_DST
# ./istioctl pc c -f envoy-productpage-v1-d4f8dfd97-p8xlb.json --fqdn PassthroughCluster -o yaml
- circuitBreakers:
    thresholds:
    - maxConnections: 4294967295
      maxPendingRequests: 4294967295
      maxRequests: 4294967295
      maxRetries: 4294967295
      trackRemaining: true
  connectTimeout: 10s
  lbPolicy: CLUSTER_PROVIDED
  name: InboundPassthroughClusterIpv4
  type: ORIGINAL_DST
  typedExtensionProtocolOptions:
    envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
      '@type': type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
      commonHttpProtocolOptions:
        idleTimeout: 300s
      useDownstreamProtocolConfig:
        http2ProtocolOptions: {}
        httpProtocolOptions: {}
  upstreamBindConfig:
    sourceAddress:
      address: 127.0.0.6
      portValue: 0
- circuitBreakers:
    thresholds:
    - maxConnections: 4294967295
      maxPendingRequests: 4294967295
      maxRequests: 4294967295
      maxRetries: 4294967295
      trackRemaining: true
  connectTimeout: 10s
  filters:
  - name: istio.metadata_exchange
    typedConfig:
      '@type': type.googleapis.com/envoy.tcp.metadataexchange.config.MetadataExchange
      protocol: istio-peer-exchange
  lbPolicy: CLUSTER_PROVIDED
  name: PassthroughCluster
  type: ORIGINAL_DST
  typedExtensionProtocolOptions:
    envoy.extensions.upstreams.http.v3.HttpProtocolOptions:
      '@type': type.googleapis.com/envoy.extensions.upstreams.http.v3.HttpProtocolOptions
      commonHttpProtocolOptions:
        idleTimeout: 300s
      useDownstreamProtocolConfig:
        http2ProtocolOptions: {}
        httpProtocolOptions: {}
```
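Whether an unmatched outbound request ends up in PassthroughCluster or BlackHoleCluster is decided by the mesh-wide outboundTrafficPolicy mode: ALLOW_ANY (the default) passes it through, REGISTRY_ONLY drops it. The Python sketch below captures that decision; it is a simplification that keys services on (host, port), whereas Envoy actually matches on listeners and routes, and the KNOWN table is a hypothetical stand-in for the mesh's service registry.

```python
from typing import Dict, Tuple

# Hypothetical registry: (host, port) -> outbound cluster name.
KNOWN: Dict[Tuple[str, int], str] = {
    ("reviews.default.svc.cluster.local", 9080): "outbound|9080||reviews.default.svc.cluster.local",
}


def pick_outbound_cluster(known: Dict[Tuple[str, int], str], host: str, port: int,
                          mode: str = "ALLOW_ANY") -> str:
    """Return the cluster an outbound request would use (simplified model)."""
    cluster = known.get((host, port))
    if cluster is not None:
        return cluster
    if mode == "ALLOW_ANY":
        return "PassthroughCluster"   # forward to the original destination as-is
    if mode == "REGISTRY_ONLY":
        return "BlackHoleCluster"     # drop traffic to unknown destinations
    raise ValueError(f"unknown outboundTrafficPolicy mode: {mode!r}")


print(pick_outbound_cluster(KNOWN, "example.com", 443))  # PassthroughCluster
```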

Listeners

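Envoy uses listeners to receive and process requests sent by downstream clients. Listeners have a pluggable architecture: different processing logic can be inserted into a Listener by configuring different filters.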

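A Listener can bind to an IP socket or a Unix Domain Socket to accept client requests, or it can bind to nothing and instead receive data handed over from other listeners. Istio takes advantage of this: the VirtualOutboundListener (port 15001) receives all outbound requests on a single port and then hands each request to a different listener according to the request's destination port.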
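The hand-off relies on Envoy's use_original_dst behavior: a connection redirected to 15001 is passed to the listener matching its original destination, trying an exact IP:port listener first, then a wildcard 0.0.0.0 listener on the same port, and otherwise staying on the receiving listener. The Python sketch below models that lookup. The listener table, including the 10.96.0.1:443 entry, is hypothetical, and the names merely imitate Istio's <ip>_<port> style.

```python
from typing import Dict, Tuple

# Hypothetical listener table keyed by (ip, port).
LISTENERS: Dict[Tuple[str, int], str] = {
    ("0.0.0.0", 9080): "0.0.0.0_9080",      # HTTP services on port 9080
    ("10.96.0.1", 443): "10.96.0.1_443",    # a TCP service bound to its VIP
}


def dispatch(original_dst: Tuple[str, int], receiving: str = "virtualOutbound") -> str:
    """Pick the listener that handles a redirected connection."""
    ip, port = original_dst
    if (ip, port) in LISTENERS:             # exact address match first
        return LISTENERS[(ip, port)]
    if ("0.0.0.0", port) in LISTENERS:      # then a wildcard listener on the port
        return LISTENERS[("0.0.0.0", port)]
    return receiving                        # no match: handled by 15001 itself


print(dispatch(("10.96.12.34", 9080)))  # 0.0.0.0_9080
```

When the connection stays on the virtualOutbound listener, its own filter chain sends it to PassthroughCluster or BlackHoleCluster, as described in the Clusters section.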

Routes

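Configures Envoy's routing rules. In the default routing rules pushed by Istio, each port gets its own route configuration, which dispatches requests according to their host.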
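Within a port's route configuration (Istio typically names it after the port, e.g. 9080), the Host header selects a virtual host by its domains list. Envoy's documented order is: exact match, then the longest suffix wildcard (*.example.com), then the longest prefix wildcard (foo.*), then the catch-all "*"; a wildcard never matches an empty string. Istio usually lists several spellings of a service, with and without the port, because the port is part of the Host header. The Python sketch below implements that order; the sample virtual hosts in the usage note are illustrative.

```python
from typing import Dict, List, Optional, Tuple


def select_virtual_host(vhosts: Dict[str, List[str]], host: str) -> Optional[str]:
    """Return the virtual host name chosen for a Host header, or None."""
    host = host.lower()
    exact: Optional[str] = None
    best_suffix: Tuple[int, Optional[str]] = (0, None)
    best_prefix: Tuple[int, Optional[str]] = (0, None)
    catch_all: Optional[str] = None
    for name, domains in vhosts.items():
        for domain in (d.lower() for d in domains):
            if domain == "*":
                catch_all = name
            elif domain == host:
                exact = name
            elif domain.startswith("*") and len(host) > len(domain) - 1 \
                    and host.endswith(domain[1:]):
                if len(domain) > best_suffix[0]:   # longest suffix wildcard wins
                    best_suffix = (len(domain), name)
            elif domain.endswith("*") and len(host) > len(domain) - 1 \
                    and host.startswith(domain[:-1]):
                if len(domain) > best_prefix[0]:   # longest prefix wildcard wins
                    best_prefix = (len(domain), name)
    for candidate in (exact, best_suffix[1], best_prefix[1], catch_all):
        if candidate is not None:
            return candidate
    return None
```

For example, with {"reviews.default.svc.cluster.local:9080": ["reviews.default.svc.cluster.local", "reviews", "reviews:9080"], "allow_any": ["*"]}, a request with Host reviews:9080 selects the reviews virtual host, while Host httpbin.org falls through to allow_any.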

Secrets

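References

Envoy documentation (Chinese translation)

In-depth analysis of Istio's traffic management implementation

Sidecar injection, transparent traffic interception, and traffic routing in Istio explained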