
This article is based on Istio 1.16.2 and Kubernetes 1.24.

Create two k8s clusters


mkdir -p multicluster
cd multicluster
cat << EOF > kind-cluster1.yaml
kind: Cluster
apiVersion: "kind.x-k8s.io/v1alpha4"
networking:
  # The host's IP instead of kind's default 127.0.0.1, so this API server is reachable from the other cluster
  apiServerAddress: "172.26.128.224"
  # Pod and service subnets must not overlap with cluster2's
  podSubnet: "10.10.0.0/16"
  serviceSubnet: "10.11.0.0/16"
nodes:
  - role: control-plane
    image: registry.cn-hangzhou.aliyuncs.com/seam/node:v1.24.15
  - role: worker
    image: registry.cn-hangzhou.aliyuncs.com/seam/node:v1.24.15
kubeadmConfigPatches:
  - |
    kind: JoinConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "topology.kubernetes.io/region=sg,topology.kubernetes.io/zone=az01"
EOF
cat << EOF > kind-cluster2.yaml
kind: Cluster
apiVersion: "kind.x-k8s.io/v1alpha4"
networking:
  # The host's IP instead of kind's default 127.0.0.1, so this API server is reachable from the other cluster
  apiServerAddress: "172.26.128.224"
  # Pod and service subnets must not overlap with cluster1's
  podSubnet: "10.12.0.0/16"
  serviceSubnet: "10.13.0.0/16"
nodes:
  - role: control-plane
    image: registry.cn-hangzhou.aliyuncs.com/seam/node:v1.24.15
  - role: worker
    image: registry.cn-hangzhou.aliyuncs.com/seam/node:v1.24.15
kubeadmConfigPatches:
  - |
    kind: JoinConfiguration
    nodeRegistration:
      kubeletExtraArgs:
        node-labels: "topology.kubernetes.io/region=sg,topology.kubernetes.io/zone=az02"
EOF
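The route-based network setup that follows only works if no pod or service subnet of one cluster overlaps with those of the other. Here the subnets are 10.10/16 and 10.11/16 for cluster1, and 10.12/16 and 10.13/16 for cluster2. Below is a minimal pure-bash sketch for checking this. The helper names `cidr_to_range` and `cidrs_overlap` are hypothetical and not part of kind or kubectl:

```bash
# cidr_to_range: print "start end" of an IPv4 CIDR as 32-bit integers.
cidr_to_range() {
  local ip="${1%/*}" len="${1#*/}" a b c d
  IFS=. read -r a b c d <<< "$ip"
  local n=$(( (a << 24) | (b << 16) | (c << 8) | d ))
  local size=$(( 1 << (32 - len) ))
  # Clear the host bits to get the network address
  local start=$(( n & ~(size - 1) & 0xFFFFFFFF ))
  echo "$start $(( start + size - 1 ))"
}

# cidrs_overlap: exit status 0 if the two CIDRs share at least one address.
cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<< "$(cidr_to_range "$1")"
  read -r s2 e2 <<< "$(cidr_to_range "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

# Pod and service subnets taken from kind-cluster1.yaml and kind-cluster2.yaml
subnets=(10.10.0.0/16 10.11.0.0/16 10.12.0.0/16 10.13.0.0/16)
for ((i = 0; i < ${#subnets[@]}; i++)); do
  for ((j = i + 1; j < ${#subnets[@]}; j++)); do
    if cidrs_overlap "${subnets[i]}" "${subnets[j]}"; then
      echo "overlap: ${subnets[i]} ${subnets[j]}"
    fi
  done
done
```

If you change any subnet in the two configs, update the `subnets` array to match. Empty output means there is no overlap.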


kind create cluster --name cluster1 --kubeconfig=istio-multicluster --config=kind-cluster1.yaml
kind create cluster --name cluster2 --kubeconfig=istio-multicluster --config=kind-cluster2.yaml
kubectl config rename-context kind-cluster1 cluster1 --kubeconfig istio-multicluster
kubectl config rename-context kind-cluster2 cluster2 --kubeconfig istio-multicluster

Connect the cluster networks


#!/bin/bash
# add_routes adds, on every node of a kind cluster, a route to each pod CIDR of another cluster
# Parameters:
#   - $1: name of the kind cluster to add routes to
#   - $2: kubeconfig path of the cluster to connect to
#   - $3: context (in that kubeconfig) of the cluster to connect to
function add_routes() {
  unset IFS
  # One "ip route add <podCIDR> via <node InternalIP>" line per node of the remote cluster
  routes=$(kubectl --kubeconfig "${2}" --context "${3}" get nodes -o jsonpath='{range .items[*]}ip route add {.spec.podCIDR} via {.status.addresses[?(@.type=="InternalIP")].address}{"\n"}{end}')
  echo "Connecting cluster ${1} to ${3}"
  # Split only on newlines so that each route command stays intact
  IFS=$'\n'
  for n in $(kind get nodes --name "${1}"); do
    for r in $routes; do
      echo "exec cmd in docker exec $n $r"
      eval "docker exec $n $r"
    done
  done
  unset IFS
}


add_routes cluster1 istio-multicluster cluster2

add_routes cluster2 istio-multicluster cluster1
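Once `add_routes` has run in both directions, every node of one cluster should have a route to each node podCIDR of the other cluster. By default, each node gets a /24 slice of `podSubnet`. Below is a hedged sketch of a checker that reads `ip route` output on stdin. `missing_routes` is a hypothetical helper name:

```bash
# missing_routes: read `ip route` output on stdin and print every CIDR passed
# as an argument that has no "<cidr> via <gateway>" route.
missing_routes() {
  awk -v want="$*" '
    BEGIN { n = split(want, cidrs, " ") }
    $2 == "via" { have[$1] = 1 }
    END {
      for (i = 1; i <= n; i++)
        if (!(cidrs[i] in have)) print cidrs[i]
    }'
}
```

For example, to check cluster1's nodes against cluster2's pod CIDRs, first collect the CIDRs:

`cidrs=$(kubectl --kubeconfig istio-multicluster --context cluster2 get nodes -o jsonpath='{.items[*].spec.podCIDR}')`

Then, for each node `n` listed by `kind get nodes --name cluster1`, run:

`docker exec "$n" ip route | missing_routes $cidrs`

`$cidrs` is left unquoted on purpose so that each CIDR becomes a separate argument. No output means every route is in place.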

Deploy Istio


cd ../
export KUBECONFIG="$(pwd)/multicluster/istio-multicluster"
export CTX_CLUSTER1=cluster1
export CTX_CLUSTER2=cluster2
curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.16.2 sh -
cd istio-1.16.2
export PATH="$PATH:$(pwd)/bin"

Configure trust

Generate the root certificate and key


mkdir -p certs

pushd certs

make -f ../tools/certs/Makefile.selfsigned.mk root-ca

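This produces:

- root-cert.pem: the generated root certificate
- root-key.pem: the generated root key
- root-ca.conf: the openssl configuration used to generate the root certificate
- root-cert.csr: the CSR generated for the root certificate

For each cluster, generate an intermediate certificate and key for the Istio CA: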


make -f ../tools/certs/Makefile.selfsigned.mk cluster1-cacerts

make -f ../tools/certs/Makefile.selfsigned.mk cluster2-cacerts

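This produces, in each cluster's directory:

- ca-cert.pem: the generated intermediate certificate
- ca-key.pem: the generated intermediate key
- cert-chain.pem: the generated certificate chain used by istiod
- root-cert.pem: the root certificate

In each cluster, create a secret named cacerts from these files: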


kubectl create namespace istio-system --context cluster1
kubectl create secret generic cacerts -n istio-system \
    --from-file=cluster1/ca-cert.pem \
    --from-file=cluster1/ca-key.pem \
    --from-file=cluster1/root-cert.pem \
    --from-file=cluster1/cert-chain.pem --context cluster1
kubectl create namespace istio-system --context cluster2
kubectl create secret generic cacerts -n istio-system \
    --from-file=cluster2/ca-cert.pem \
    --from-file=cluster2/ca-key.pem \
    --from-file=cluster2/root-cert.pem \
    --from-file=cluster2/cert-chain.pem --context cluster2
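Before installing Istio, you can sanity-check the plugged-in CA files with openssl. Three things should hold:

- Both clusters carry the same root, since cross-cluster mTLS depends on a shared root of trust.
- Each intermediate certificate is signed by that root.
- Each key matches its certificate.

Below is a minimal sketch. `verify_cacerts` is a hypothetical helper, and openssl must be installed. Run it from the certs directory entered with `pushd certs` above:

```bash
# verify_cacerts: sanity-check one cluster's CA files.
#   $1: cluster directory holding ca-cert.pem, ca-key.pem and root-cert.pem
#   $2: the shared root certificate
verify_cacerts() {
  local dir="$1" root="$2"
  # The cluster's copy of the root must be identical to the shared root
  if ! cmp -s "$dir/root-cert.pem" "$root"; then
    echo "$dir: root-cert.pem differs from $root" >&2
    return 1
  fi
  # The intermediate CA certificate must chain up to that root
  if ! openssl verify -CAfile "$root" "$dir/ca-cert.pem" >/dev/null 2>&1; then
    echo "$dir: ca-cert.pem is not signed by $root" >&2
    return 1
  fi
  # The private key must belong to the intermediate certificate
  if [ "$(openssl x509 -in "$dir/ca-cert.pem" -noout -pubkey)" != \
       "$(openssl pkey -in "$dir/ca-key.pem" -pubout)" ]; then
    echo "$dir: ca-key.pem does not match ca-cert.pem" >&2
    return 1
  fi
  echo "$dir: OK"
}
```

Usage: `verify_cacerts cluster1 root-cert.pem && verify_cacerts cluster2 root-cert.pem`.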

Install Istio

Install cluster1 as a primary cluster


cat <<EOF > cluster1.yaml

apiVersion: install.istio.io/v1alpha1

kind: IstioOperator

spec:

values:

global:

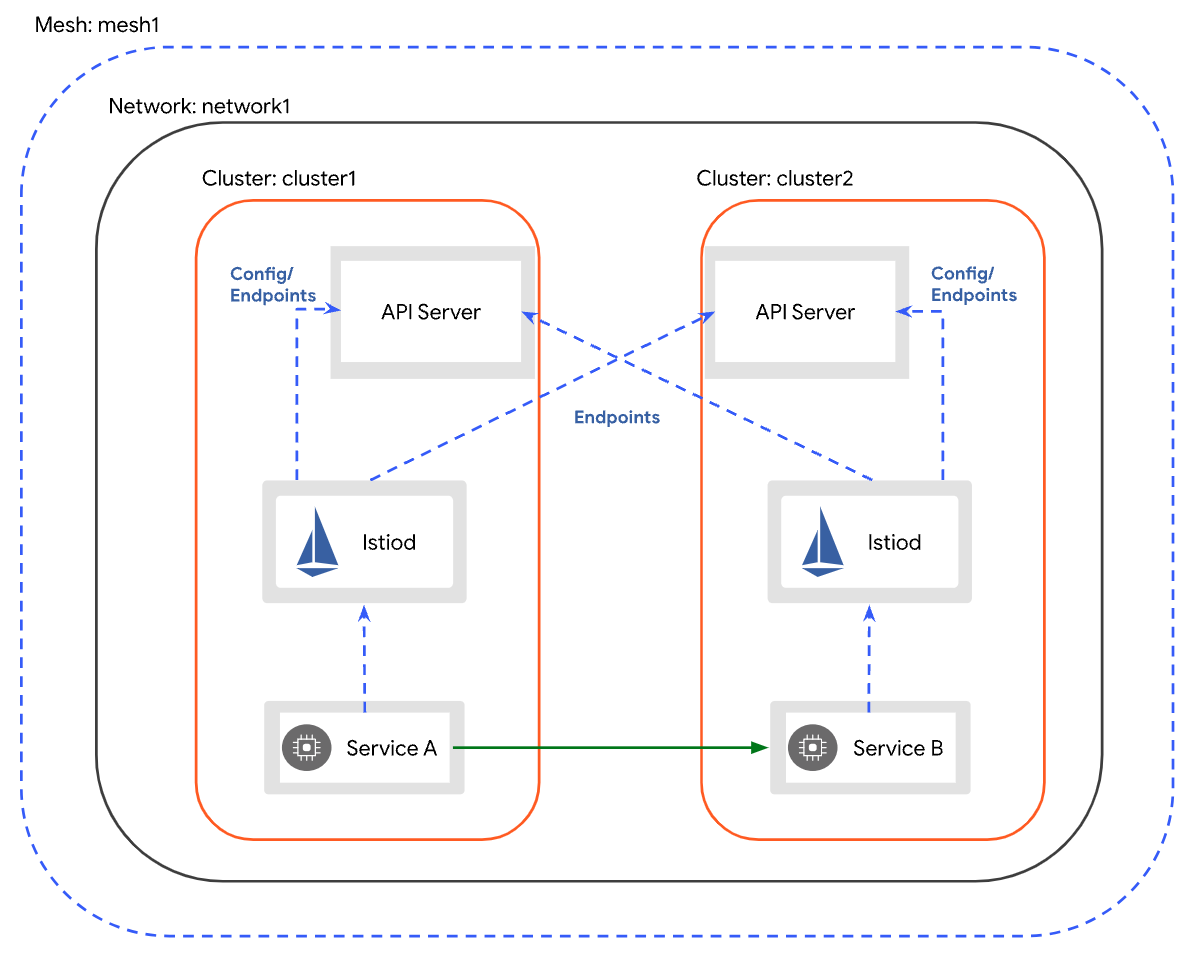

meshID: mesh1

multiCluster:

clusterName: cluster1

network: network1

EOF

istioctl install --context= " ${ CTX_CLUSTER1 } " -f cluster1.yaml

Install cluster2 as a primary cluster


cat <<EOF > cluster2.yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  values:
    global:
      meshID: mesh1
      multiCluster:
        clusterName: cluster2
      network: network1
EOF
istioctl install --context="${CTX_CLUSTER2}" -f cluster2.yaml
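cluster1.yaml and cluster2.yaml differ only in `clusterName`. Both clusters use `network1` because, after the route setup, their pod networks can reach each other directly. Below is a hypothetical generator function, `istio_operator_yaml`, that prints the same IstioOperator for any cluster name:

```bash
# istio_operator_yaml: print the IstioOperator used above for one primary cluster.
#   $1: cluster name (keep it consistent with --name in create-remote-secret)
#   $2: network name (network1 here, since both pod networks are routable)
istio_operator_yaml() {
  cat <<EOF
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
spec:
  values:
    global:
      meshID: mesh1
      multiCluster:
        clusterName: $1
      network: $2
EOF
}
```

For example, `istio_operator_yaml cluster1 network1 > cluster1.yaml` reproduces the file used above.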

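Enable endpoint discovery

In each cluster, create a secret that contains the other (remote) cluster's kubeconfig. Istio recognizes such secrets by the label istio/multiCluster=true and uses them to discover the remote cluster.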


istioctl x create-remote-secret \
    --context="${CTX_CLUSTER1}" \
    --name=cluster1 | \
    kubectl apply -f - --context="${CTX_CLUSTER2}"
istioctl x create-remote-secret \
    --context="${CTX_CLUSTER2}" \
    --name=cluster2 | \
    kubectl apply -f - --context="${CTX_CLUSTER1}"


# kubectl get secret -n istio-system istio-remote-secret-cluster2 --show-labels --context cluster1
NAME                           TYPE     DATA   AGE   LABELS
istio-remote-secret-cluster2   Opaque   1      50s   istio/multiCluster=true
# kubectl get secret -n istio-system istio-remote-secret-cluster1 --show-labels --context cluster2
NAME                           TYPE     DATA   AGE   LABELS
istio-remote-secret-cluster1   Opaque   1      58s   istio/multiCluster=true

Verify cross-cluster access

Deploy the helloworld service

Create the namespace and enable automatic sidecar injection


kubectl create --context="${CTX_CLUSTER1}" namespace sample
kubectl create --context="${CTX_CLUSTER2}" namespace sample
kubectl label --context="${CTX_CLUSTER1}" namespace sample \
    istio-injection=enabled
kubectl label --context="${CTX_CLUSTER2}" namespace sample \
    istio-injection=enabled

Deploy version v1 to cluster1


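# The helloworld Service must exist in each cluster so that helloworld.sample resolves
kubectl apply --context="${CTX_CLUSTER1}" -f samples/helloworld/helloworld.yaml -l service=helloworld -n sample
kubectl apply --context="${CTX_CLUSTER1}" \
    -f samples/helloworld/helloworld.yaml \
    -l version=v1 -n sample
kubectl get pod --context="${CTX_CLUSTER1}" -n sample -l app=helloworld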

Deploy version v2 to cluster2


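# The helloworld Service must exist in each cluster so that helloworld.sample resolves
kubectl apply --context="${CTX_CLUSTER2}" -f samples/helloworld/helloworld.yaml -l service=helloworld -n sample
kubectl apply --context="${CTX_CLUSTER2}" \
    -f samples/helloworld/helloworld.yaml \
    -l version=v2 -n sample
kubectl get pod --context="${CTX_CLUSTER2}" -n sample -l app=helloworld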

Deploy the sleep service

Deploy it to each cluster:


kubectl apply --context="${CTX_CLUSTER1}" \
    -f samples/sleep/sleep.yaml -n sample
kubectl apply --context="${CTX_CLUSTER2}" \
    -f samples/sleep/sleep.yaml -n sample

Confirm that the pods are running:


kubectl get pod --context="${CTX_CLUSTER1}" -n sample -l app=sleep
kubectl get pod --context="${CTX_CLUSTER2}" -n sample -l app=sleep
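Automatic injection is enabled on the sample namespace, so each helloworld and sleep pod should report READY 2/2: the application container plus the istio-proxy sidecar. Below is a small sketch that flags any pod that does not. It assumes each sample pod has exactly one application container. `check_sidecars` is a hypothetical name:

```bash
# check_sidecars: read `kubectl get pod` output on stdin and print every pod
# whose READY column is not 2/2 (app container + istio-proxy sidecar).
check_sidecars() {
  awk 'NR > 1 && $2 != "2/2" { print $1, $2 }'
}
```

`kubectl get pod --context="${CTX_CLUSTER1}" -n sample | check_sidecars` prints nothing when every pod is injected and ready. A pod shown as 1/2 may simply still be starting.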

Inspect the endpoints


# istioctl ps
NAME                                    CLUSTER    CDS       LDS       EDS       RDS       ECDS        ISTIOD                   VERSION
helloworld-v2-79bf565586-jq449.sample   cluster2   SYNCED    SYNCED    SYNCED    SYNCED    NOT SENT    istiod-bf55d77c-6ttql    1.16.2
sleep-69cfb4968f-s5xtl.sample           cluster2   SYNCED    SYNCED    SYNCED    SYNCED    NOT SENT    istiod-bf55d77c-6ttql    1.16.2

# istioctl pc c helloworld-v2-79bf565586-jq449.sample --port 5000
SERVICE FQDN                          PORT    SUBSET    DIRECTION    TYPE            DESTINATION RULE
                                      5000    -         inbound      ORIGINAL_DST    helloworld.sample
helloworld.sample.svc.cluster.local   5000    -         outbound     EDS             helloworld.sample

# istioctl pc endpoint helloworld-v2-79bf565586-jq449.sample --cluster "outbound|5000||helloworld.sample.svc.cluster.local"
ENDPOINT         STATUS     OUTLIER CHECK    CLUSTER
10.10.0.7:5000   HEALTHY    OK               outbound|5000||helloworld.sample.svc.cluster.local
10.12.0.7:5000   HEALTHY    OK               outbound|5000||helloworld.sample.svc.cluster.local

# kubectl get pod -o wide -n sample --context cluster2 -l app=helloworld
NAME                             READY   STATUS    RESTARTS   AGE     IP          NODE                     NOMINATED NODE   READINESS GATES
helloworld-v2-79bf565586-jq449   2/2     Running   0          7h24m   10.12.0.7   cluster2-control-plane   <none>           <none>

# kubectl get pod -o wide -n sample --context cluster1 -l app=helloworld
NAME                             READY   STATUS    RESTARTS   AGE     IP          NODE                     NOMINATED NODE   READINESS GATES
helloworld-v1-77cb56d4b4-pgzvf   2/2     Running   0          7h24m   10.10.0.7   cluster1-control-plane   <none>           <none>
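The two clusters use disjoint pod subnets: 10.10.0.0/16 in kind-cluster1.yaml and 10.12.0.0/16 in kind-cluster2.yaml. That means each endpoint's origin can be read off its IP, which helps once more replicas make the list longer. Below is a sketch that tags `istioctl pc endpoint` output accordingly. `endpoints_by_cluster` is a hypothetical name, and the subnet-to-cluster mapping is hard-coded for this setup:

```bash
# endpoints_by_cluster: read `istioctl pc endpoint` output on stdin and print
# "<endpoint> <cluster>". With /16 pod subnets, the first two octets decide.
endpoints_by_cluster() {
  awk 'NR > 1 && $1 ~ /^[0-9.]+:[0-9]+$/ {
    split($1, o, ".")
    prefix = o[1] "." o[2]
    if (prefix == "10.10") c = "cluster1"
    else if (prefix == "10.12") c = "cluster2"
    else c = "unknown"
    print $1, c
  }'
}
```

Usage: pipe the `istioctl pc endpoint ... --cluster "outbound|5000||helloworld.sample.svc.cluster.local"` command shown above into `endpoints_by_cluster`.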


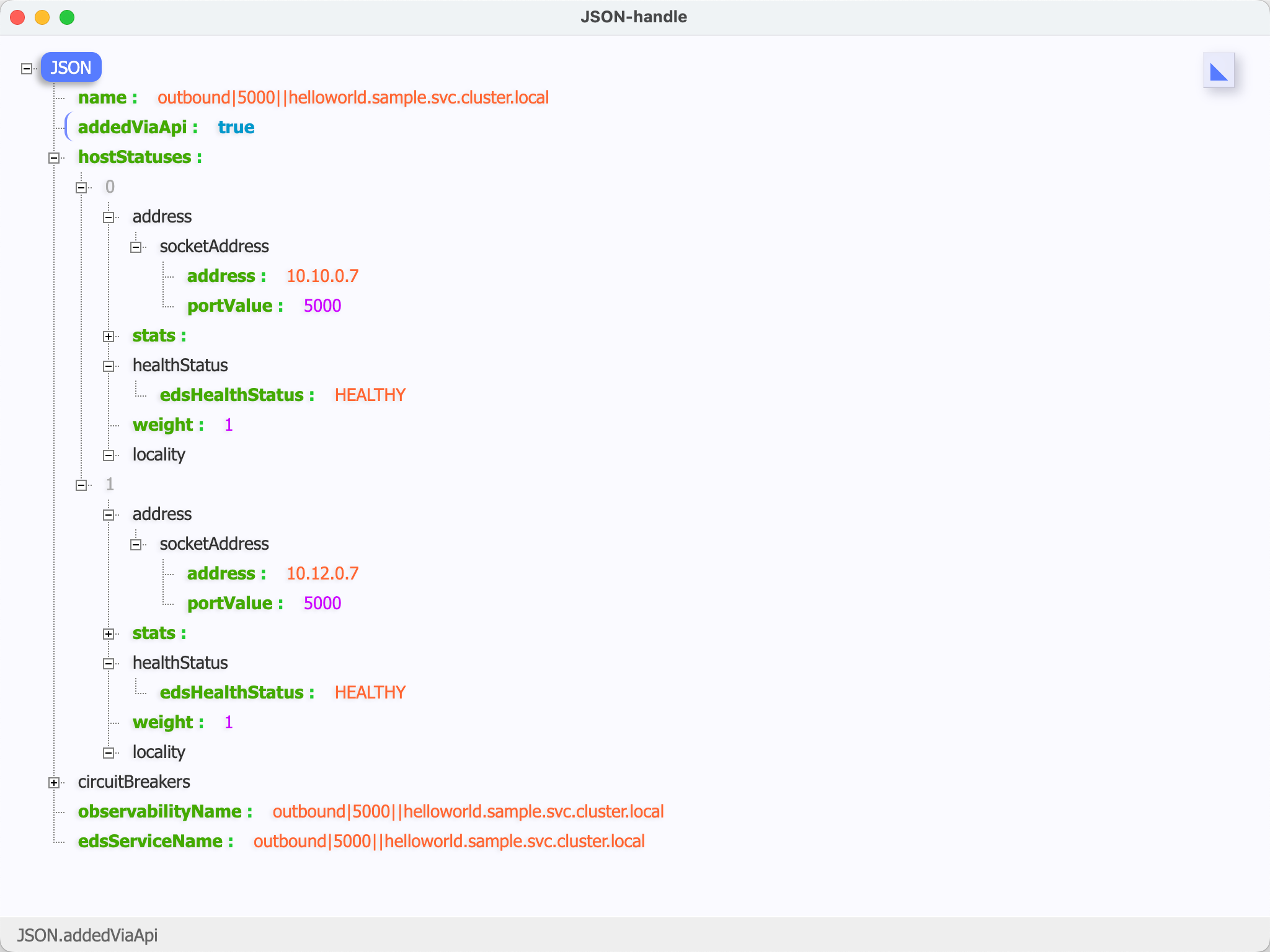

# istioctl pc endpoint helloworld-v2-79bf565586-jq449.sample --cluster "outbound|5000||helloworld.sample.svc.cluster.local" -o json

As shown, the pods from both clusters are registered with Istio.

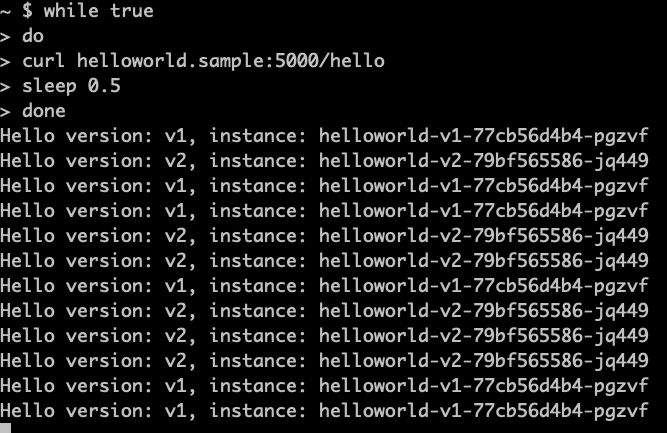

Test cross-cluster requests


kubectl exec --context="${CTX_CLUSTER1}" -n sample -c sleep \
    "$(kubectl get pod --context="${CTX_CLUSTER1}" -n sample -l \
    app=sleep -o jsonpath='{.items[0].metadata.name}')" \
    -- curl -sS helloworld.sample:5000/hello

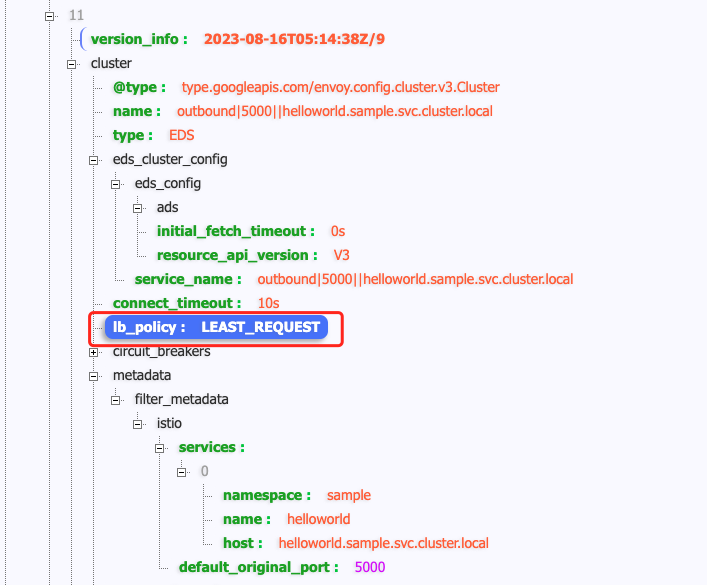
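The screenshot shows the distribution of only a handful of requests. To measure it over more requests, you can repeat the same curl from the sleep pod and tally the versions. The sketch below assumes helloworld answers with lines such as `Hello version: v1, instance: ...`, as the Istio sample does. `hello_requests` and `count_versions` are hypothetical names:

```bash
# hello_requests: send N requests (default 20) from the first sleep pod in the
# given kube context to helloworld.sample:5000/hello, printing each response.
hello_requests() {
  local ctx="$1" n="${2:-20}" pod i
  pod=$(kubectl get pod --context="$ctx" -n sample -l app=sleep \
    -o jsonpath='{.items[0].metadata.name}')
  for ((i = 0; i < n; i++)); do
    kubectl exec --context="$ctx" -n sample -c sleep "$pod" -- \
      curl -sS helloworld.sample:5000/hello
  done
}

# count_versions: read helloworld responses on stdin and print
# "<version> <count>" for each version seen, sorted by version.
count_versions() {
  grep -o 'version: v[0-9]*' | sort | uniq -c | awk '{ print $3, $1 }'
}
```

`hello_requests "${CTX_CLUSTER1}" 50 | count_versions` prints one line per version with its request count.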

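The figure above shows that traffic is not spread round-robin, even though the Envoy documentation [4] lists round robin as the default policy.

Inspecting the Envoy cluster configuration shows that the load-balancing policy is LEAST_REQUEST. For reasons I have not pinned down, Istio 1.16 defaults to LEAST_REQUEST. In our production environment on Istio 1.13.4, no lb_policy is configured by default, so Envoy's own default, round robin, applies there.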
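If round robin is preferred, you can set the policy explicitly with a DestinationRule instead of relying on the default. The sketch below assumes the `networking.istio.io/v1beta1` DestinationRule API. The resource name `helloworld-round-robin` is hypothetical.

The DESTINATION RULE column in the `istioctl pc c` output above suggests that a DestinationRule for helloworld may already exist. If so, add the `loadBalancer` block to it instead, so that two DestinationRules do not compete for the same host.

```bash
# round_robin_dr: print a DestinationRule that sets ROUND_ROBIN for helloworld.
round_robin_dr() {
  cat <<EOF
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: helloworld-round-robin
  namespace: sample
spec:
  host: helloworld.sample.svc.cluster.local
  trafficPolicy:
    loadBalancer:
      simple: ROUND_ROBIN
EOF
}
```

Apply it in both clusters, since each primary's istiod reads configuration from its own cluster:

- `round_robin_dr | kubectl apply -f - --context="${CTX_CLUSTER1}"`
- `round_robin_dr | kubectl apply -f - --context="${CTX_CLUSTER2}"`

Then inspect the client's cluster config:

`istioctl pc cluster <sleep-pod>.sample --fqdn helloworld.sample.svc.cluster.local -o json | grep -i lbPolicy`

An empty result is expected. ROUND_ROBIN is the zero value of Envoy's LbPolicy enum and is typically omitted from the JSON, which is consistent with no lb_policy appearing on 1.13.4.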

Enable access logs


export NAMESPACE=sample
export WORKLOAD=helloworld
cat << EOF | kubectl apply -f - --context="${CTX_CLUSTER1}"
apiVersion: networking.istio.io/v1alpha3
kind: EnvoyFilter
metadata:
  name: enable-accesslog
  namespace: ${NAMESPACE}
spec:
  configPatches:
    - applyTo: NETWORK_FILTER
      match:
        context: ANY
        listener:
          filterChain:
            filter:
              name: envoy.filters.network.http_connection_manager
      patch:
        operation: MERGE
        value:
          typed_config:
            '@type': type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
            access_log:
              - name: envoy.access_loggers.file
                typed_config:
                  '@type': type.googleapis.com/envoy.extensions.access_loggers.file.v3.FileAccessLog
                  path: /dev/stdout
                  log_format:
                    json_format:
                      authority: "%REQ(:AUTHORITY)%"
                      bytes_received: "%BYTES_RECEIVED%"
                      bytes_sent: "%BYTES_SENT%"
                      downstream_local_address: "%DOWNSTREAM_LOCAL_ADDRESS%"
                      downstream_remote_address: "%DOWNSTREAM_REMOTE_ADDRESS%"
                      duration: "%DURATION%"
                      method: "%REQ(:METHOD)%"
                      path: "%REQ(X-ENVOY-ORIGINAL-PATH?:PATH)%"
                      protocol: "%PROTOCOL%"
                      request_id: "%REQ(X-REQUEST-ID)%"
                      requested_server_name: "%REQUESTED_SERVER_NAME%"
                      response_code: "%RESPONSE_CODE%"
                      response_flags: "%RESPONSE_FLAGS%"
                      route_name: "%ROUTE_NAME%"
                      start_time: "%START_TIME%"
                      upstream_cluster: "%UPSTREAM_CLUSTER%"
                      upstream_host: "%UPSTREAM_HOST%"
                      upstream_local_address: "%UPSTREAM_LOCAL_ADDRESS%"
                      upstream_service_time: "%RESP(X-ENVOY-UPSTREAM-SERVICE-TIME)%"
                      upstream_transport_failure_reason: "%UPSTREAM_TRANSPORT_FAILURE_REASON%"
                      user_agent: "%REQ(USER-AGENT)%"
                      x_forwarded_for: "%REQ(X-FORWARDED-FOR)%"
  workloadSelector:
    labels:
      app: ${WORKLOAD}
EOF
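With the filter in place, the istio-proxy container of the helloworld pod in cluster1 writes one JSON object per request to stdout. Below is a jq-free sketch for pulling a single string field out of those lines. `log_field` is a hypothetical helper, and it assumes flat JSON whose string values contain no escaped quotes:

```bash
# log_field: read JSON access-log lines on stdin and print the value of the
# named string field, one per line (plain grep/sed instead of jq).
log_field() {
  grep -o "\"$1\":[[:space:]]*\"[^\"]*\"" |
    sed -E 's/^"[^"]*":[[:space:]]*"(.*)"$/\1/'
}
```

For instance, the following shows which client IPs reached helloworld-v1:

`kubectl logs --context="${CTX_CLUSTER1}" -n sample deploy/helloworld-v1 -c istio-proxy | log_field downstream_remote_address | cut -d: -f1 | sort | uniq -c`

With the flat pod network, these should be sleep pod IPs from 10.10.0.0/16 or 10.12.0.0/16.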

References

[1] https://istio.io/v1.16/zh/docs/setup/install/multicluster/multi-primary/
[2] https://istio.io/v1.16/zh/docs/tasks/security/cert-management/plugin-ca-cert/
[3] https://istio.io/v1.16/zh/docs/setup/install/multicluster/verify/
[4] https://www.envoyproxy.io/docs/envoy/latest/api-v3/config/cluster/v3/cluster.proto.html#envoy-v3-api-enum-config-cluster-v3-cluster-lbpolicy