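I have been following how the Karmada community approaches multi-cluster service governance. Before v1.7, the community handled it through the Karmada-Controller component. Starting with v1.7, it introduced a dedicated Karmada-Service-Controller component for this purpose, and later refactored that component. As soon as v1.8 was released I tried out service governance with MultiClusterService, and this post records the process.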

Installing Karmada

Install Karmada by following the Karmada quick start guide, or simply run `hack/local-up-karmada.sh` from the Karmada repository.

Member cluster network

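Make sure at least two clusters are joined to Karmada and that the container (Pod) networks of the member clusters can reach each other. This walkthrough uses three member clusters: member1, member2 and member3.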

Deploying the service in member1

```shell
# Apply against the Karmada control plane (karmada-apiserver)
cat << EOF | kubectl apply -f -
apiVersion: apps/v1
kind: Deployment
metadata:
  name: serve
spec:
  replicas: 1
  selector:
    matchLabels:
      app: serve
  template:
    metadata:
      labels:
        app: serve
    spec:
      containers:
      - name: serve
        image: jeremyot/serve:0a40de8
        args:
        - "--message='hello from cluster member1 (Node: {{env \"NODE_NAME\"}} Pod: {{env \"POD_NAME\"}} Address: {{env \"POD_IP\"}})'"
        env:
          - name: NODE_NAME
            valueFrom:
              fieldRef:
                fieldPath: spec.nodeName
          - name: POD_NAME
            valueFrom:
              fieldRef:
                fieldPath: metadata.name
          - name: POD_IP
            valueFrom:
              fieldRef:
                fieldPath: status.podIP
---
apiVersion: v1
kind: Service
metadata:
  name: serve
spec:
  ports:
  - port: 80
    targetPort: 8080
  selector:
    app: serve
---
apiVersion: policy.karmada.io/v1alpha1
kind: PropagationPolicy
metadata:
  name: mcs-workload
spec:
  resourceSelectors:
    - apiVersion: apps/v1
      kind: Deployment
      name: serve
    - apiVersion: v1
      kind: Service
      name: serve
  placement:
    clusterAffinity:
      clusterNames:
        - member1
EOF
```

Configuring the MultiClusterService

```shell
# The MultiClusterService name must match the Service name (serve)
cat << EOF | kubectl apply -f -
---
apiVersion: networking.karmada.io/v1alpha1
kind: MultiClusterService
metadata:
  name: serve
spec:
  types:
    - CrossCluster
EOF
```

Checking the service

```shell
# kubectl get svc --context member1
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.11.0.1       <none>        443/TCP   13m
serve        ClusterIP   10.11.106.233   <none>        80/TCP    6m43s
# kubectl get pod --context member1
NAME                     READY   STATUS    RESTARTS   AGE
serve-6c497897b8-drnt8   1/1     Running   0          6m50s
# kubectl get pod -o wide --context member1
NAME                     READY   STATUS    RESTARTS   AGE     IP          NODE                    NOMINATED NODE   READINESS GATES
serve-6c497897b8-drnt8   1/1     Running   0          6m55s   10.10.0.6   member1-control-plane   <none>           <none>
# kubectl get pod -o wide --context member2
No resources found in default namespace.
# kubectl get svc --context member2
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.13.0.1       <none>        443/TCP   13m
serve        ClusterIP   10.13.181.116   <none>        80/TCP    3m9s
```

serve is deployed in member1 with Pod IP 10.10.0.6. member2 has no serve Pod, yet the MultiClusterService has created the serve Service there.

```shell
# kubectl get EndpointSlice -l kubernetes.io/service-name=serve --context member3
NAME                  ADDRESSTYPE   PORTS     ENDPOINTS   AGE
member1-serve-bcfd6   IPv4          8080      10.10.0.6   24m
member2-serve-mvgj7   IPv4          <unset>   <unset>     24m
serve-5qzln           IPv4          <unset>   <unset>     24m
# kubectl get EndpointSlice -l kubernetes.io/service-name=serve --context member2
NAME                  ADDRESSTYPE   PORTS     ENDPOINTS   AGE
member1-serve-bcfd6   IPv4          8080      10.10.0.6   24m
member3-serve-5qzln   IPv4          <unset>   <unset>     24m
serve-mvgj7           IPv4          <unset>   <unset>     24m
# kubectl get EndpointSlice -l kubernetes.io/service-name=serve --context member1
NAME                  ADDRESSTYPE   PORTS     ENDPOINTS   AGE
member2-serve-mvgj7   IPv4          <unset>   <unset>     24m
member3-serve-5qzln   IPv4          <unset>   <unset>     24m
serve-bcfd6           IPv4          8080      10.10.0.6   28m
```

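Selecting by the label kubernetes.io/service-name=serve shows that every member cluster holds its own local EndpointSlice plus one EndpointSlice for each of the other clusters, named with the source cluster as a prefix (for example member1-serve-bcfd6). Only member1 has a ready endpoint (10.10.0.6); the slices from member2 and member3 are empty.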
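To make the naming pattern explicit, here is a small Python sketch that predicts which EndpointSlice names each member cluster should list. It assumes, based only on the kubectl output above and not on Karmada's source, that a cluster keeps its own slice name unchanged and receives every other cluster's slice as `<source-cluster>-<slice-name>`. The function name `expected_slices` is hypothetical.

```python
def expected_slices(local_slices):
    """Predict the EndpointSlice names visible in each member cluster.

    local_slices maps cluster name -> name of the EndpointSlice that the
    cluster's own Kubernetes EndpointSlice controller created.
    Assumption (inferred from the kubectl output, not from Karmada code):
    slices imported from other clusters are named "<source-cluster>-<slice>".
    """
    result = {}
    for cluster, own_slice in local_slices.items():
        names = [own_slice]
        for other, other_slice in local_slices.items():
            if other != cluster:
                names.append(f"{other}-{other_slice}")
        # Sort by name, matching the order in the kubectl output above.
        result[cluster] = sorted(names)
    return result


# Local slice names taken from the output above.
observed = {"member1": "serve-bcfd6", "member2": "serve-mvgj7", "member3": "serve-5qzln"}
for cluster, names in expected_slices(observed).items():
    print(cluster, names)
```

Comparing the printed lists with the three `kubectl get EndpointSlice` listings is a quick way to confirm that every cluster has received a slice from every other cluster.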

Deploying a client in member2

```shell
# kubectl --context member2 run -i --rm --restart=Never --image=jeremyot/request:0a40de8 request -- --duration=10s --address=serve
If you don't see a command prompt, try pressing enter.
2023/12/26 02:11:39 'hello from cluster member1 (Node: member1-control-plane Pod: serve-6c497897b8-drnt8 Address: 10.10.0.6)'
2023/12/26 02:11:40 'hello from cluster member1 (Node: member1-control-plane Pod: serve-6c497897b8-drnt8 Address: 10.10.0.6)'
2023/12/26 02:11:41 'hello from cluster member1 (Node: member1-control-plane Pod: serve-6c497897b8-drnt8 Address: 10.10.0.6)'
2023/12/26 02:11:42 'hello from cluster member1 (Node: member1-control-plane Pod: serve-6c497897b8-drnt8 Address: 10.10.0.6)'
2023/12/26 02:11:43 'hello from cluster member1 (Node: member1-control-plane Pod: serve-6c497897b8-drnt8 Address: 10.10.0.6)'
2023/12/26 02:11:44 'hello from cluster member1 (Node: member1-control-plane Pod: serve-6c497897b8-drnt8 Address: 10.10.0.6)'
2023/12/26 02:11:45 'hello from cluster member1 (Node: member1-control-plane Pod: serve-6c497897b8-drnt8 Address: 10.10.0.6)'
2023/12/26 02:11:46 'hello from cluster member1 (Node: member1-control-plane Pod: serve-6c497897b8-drnt8 Address: 10.10.0.6)'
2023/12/26 02:11:47 'hello from cluster member1 (Node: member1-control-plane Pod: serve-6c497897b8-drnt8 Address: 10.10.0.6)'
pod "request" deleted
```

The client running in member2 gets responses carrying member1's node name and Pod IP, which shows that the cross-cluster service call succeeded.

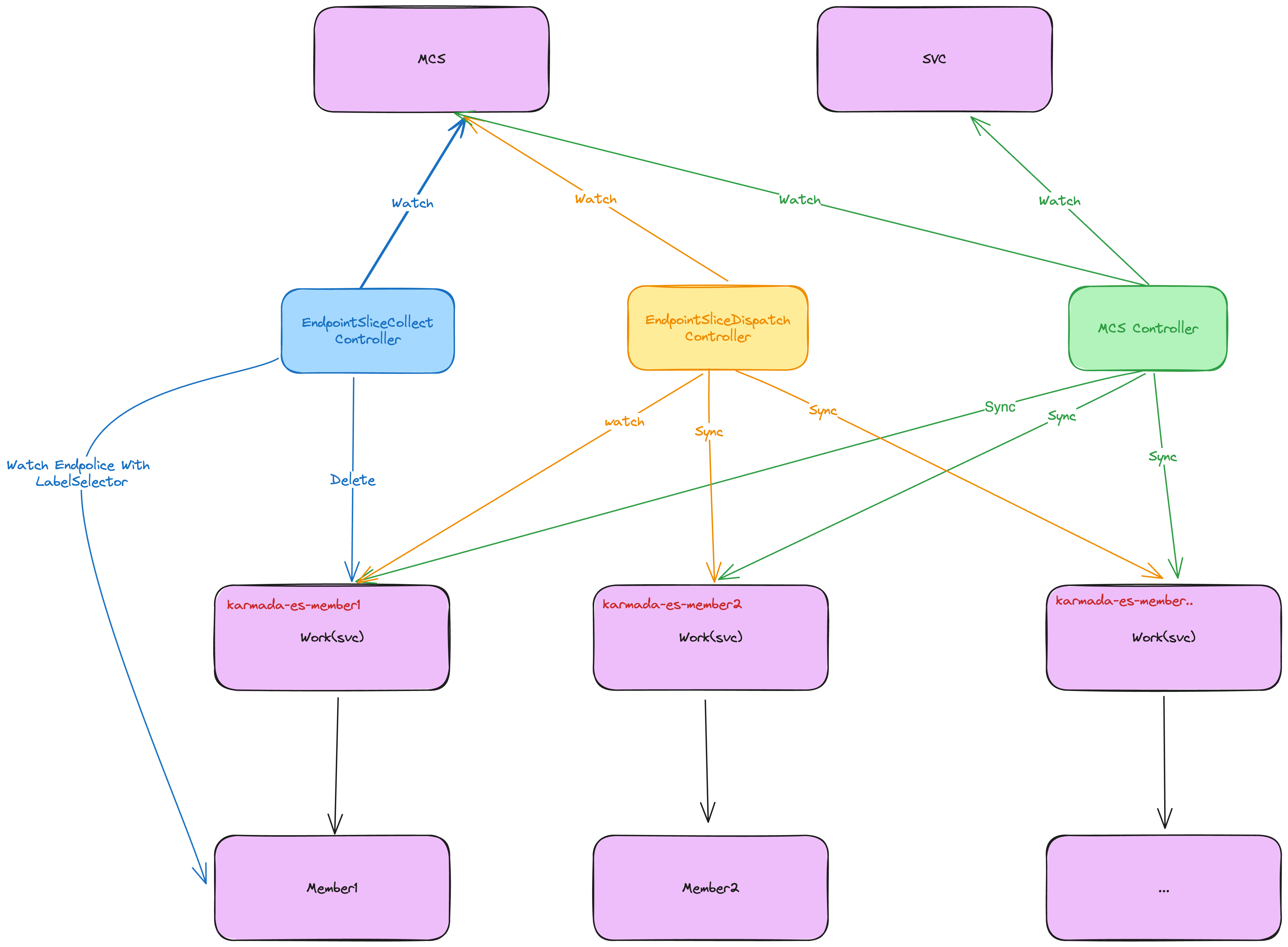

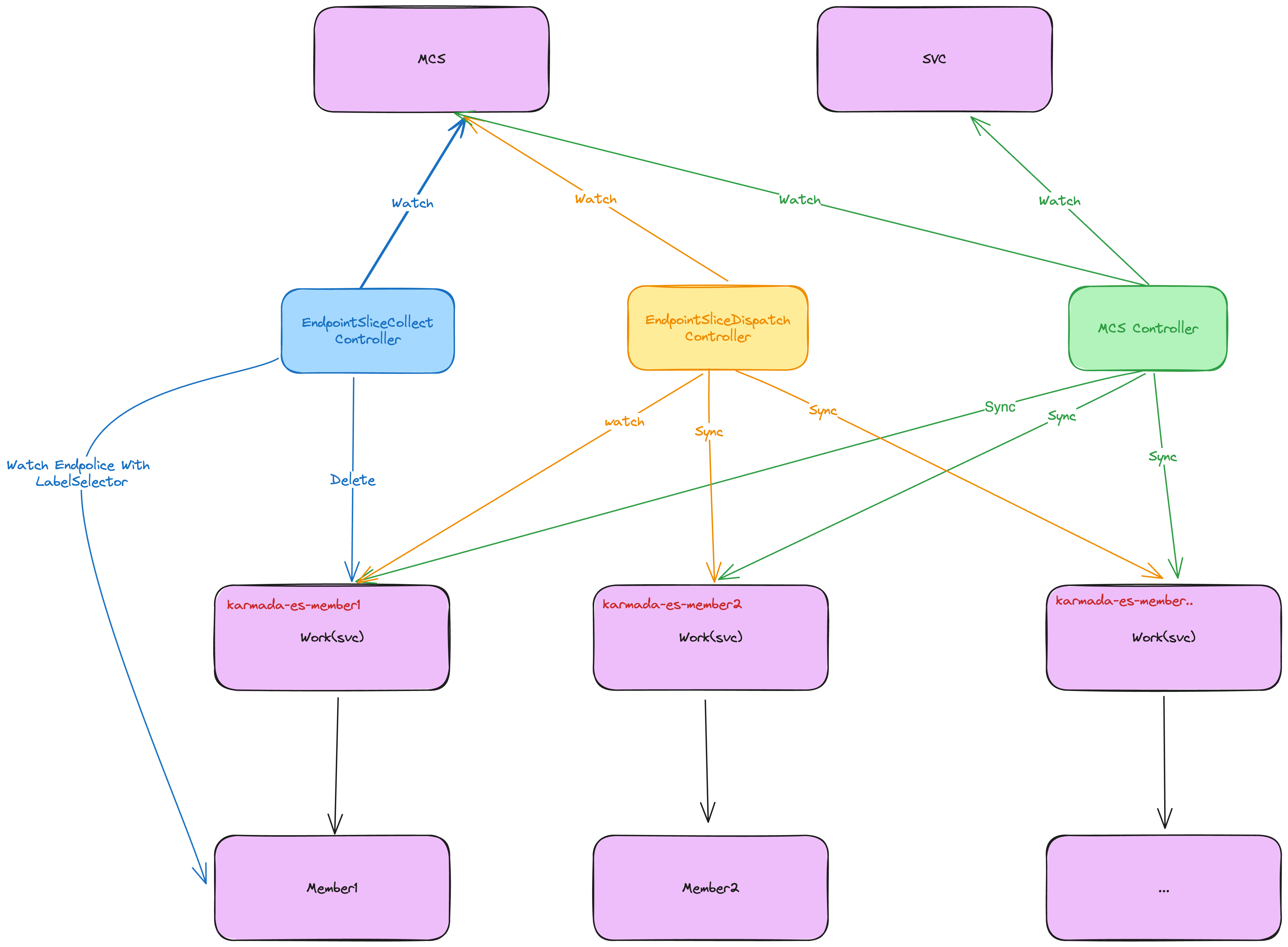

How the MCS Controller synchronizes resources

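The MCS Controller lists and watches MultiClusterService and Service resources on the Karmada control plane:

- Once a MultiClusterService and a Service with the same name both exist, the MCS Controller creates Works in the namespaces of the target clusters defined by spec.serviceProvisionClusters and spec.serviceConsumptionClusters.
- It associates the Works with the Service and schedules them to the target clusters defined by spec.serviceProvisionClusters.

The endpointsliceCollect controller:

- lists and watches MultiClusterService resources on the Karmada control plane;
- uses informers to list and watch the EndpointSlices of the target Service in the clusters defined by spec.serviceProvisionClusters;
- creates a corresponding Work in the cluster namespace for each EndpointSlice.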
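The controller steps above can be modeled with a toy Python sketch. It is not Karmada's code: the `Work` dataclass and the function names are hypothetical simplifications, and the only Karmada convention it relies on is that each member cluster has an execution namespace named `karmada-es-<cluster>` on the control plane. It shows which Works would be created for one MultiClusterService, given the provision and consumption cluster lists and the EndpointSlices found in provision clusters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Work:
    namespace: str  # per-cluster execution namespace on the Karmada control plane
    kind: str       # kind of the wrapped manifest
    name: str       # name of the wrapped manifest


def execution_namespace(cluster):
    # Karmada's execution namespace for a member cluster: "karmada-es-<cluster>".
    return f"karmada-es-{cluster}"


def mcs_controller(mcs_name, service_names, provision, consumption):
    """Hypothetical model: create Service Works when an MCS and a Service share a name."""
    if mcs_name not in service_names:
        return []  # no Service with a matching name, nothing to do
    targets = sorted(set(provision) | set(consumption))
    return [Work(execution_namespace(c), "Service", mcs_name) for c in targets]


def endpointslice_collect(provision, slices_by_cluster):
    """Hypothetical model: one Work per EndpointSlice found in each provision cluster."""
    works = []
    for cluster in provision:
        for slice_name in slices_by_cluster.get(cluster, []):
            works.append(Work(execution_namespace(cluster), "EndpointSlice", slice_name))
    return works


# Assumption: all three clusters act as both providers and consumers,
# which is what the EndpointSlice output above suggests for this MCS.
clusters = ["member1", "member2", "member3"]
svc_works = mcs_controller("serve", {"serve"}, clusters, clusters)
slice_works = endpointslice_collect(
    clusters,
    {"member1": ["serve-bcfd6"], "member2": ["serve-mvgj7"], "member3": ["serve-5qzln"]},
)
for work in svc_works + slice_works:
    print(work)
```

Keeping the two functions separate mirrors the split described above: one controller reacts to the MultiClusterService/Service pair, the other only to EndpointSlices in provision clusters.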

Supplementary notes

How an EndpointSlice is associated with a Service

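In most cases, an EndpointSlice is owned by a Service, because the slice tracks endpoints on behalf of that Service. This ownership is indicated by an owner reference on each EndpointSlice together with the kubernetes.io/service-name label, which makes it easy to look up all EndpointSlices belonging to a Service.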
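With that label, finding a Service's slices is a plain label-selector match, which is what `kubectl get EndpointSlice -l kubernetes.io/service-name=serve` asks the API server to do. A minimal Python sketch over plain dicts shaped like EndpointSlice metadata; the helper names and the second sample object (`other-x1y2z`) are made up for illustration.

```python
SERVICE_NAME_LABEL = "kubernetes.io/service-name"


def slices_for_service(slices, service_name):
    """Keep slices whose service-name label equals service_name (like `-l label=value`)."""
    return [
        s for s in slices
        if s["metadata"].get("labels", {}).get(SERVICE_NAME_LABEL) == service_name
    ]


def owned_by_service(slice_obj, service_name):
    """True if one of the slice's ownerReferences points at the named Service."""
    return any(
        ref.get("kind") == "Service" and ref.get("name") == service_name
        for ref in slice_obj["metadata"].get("ownerReferences", [])
    )


sample = [
    {"metadata": {
        "name": "serve-bcfd6",
        "labels": {SERVICE_NAME_LABEL: "serve"},
        "ownerReferences": [{"apiVersion": "v1", "kind": "Service", "name": "serve"}],
    }},
    {"metadata": {  # hypothetical slice belonging to another Service
        "name": "other-x1y2z",
        "labels": {SERVICE_NAME_LABEL: "other"},
        "ownerReferences": [{"apiVersion": "v1", "kind": "Service", "name": "other"}],
    }},
]
print([s["metadata"]["name"] for s in slices_for_service(sample, "serve")])
```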

Traffic distribution overview

Current environment

```shell
# kubectl get pod -o wide --context member1
NAME                     READY   STATUS    RESTARTS   AGE     IP          NODE                    NOMINATED NODE   READINESS GATES
serve-668b66f786-gdqrq   1/1     Running   0          10m     10.10.0.6   member1-control-plane   <none>           <none>
# kubectl get pod -o wide --context member2
NAME                     READY   STATUS    RESTARTS   AGE     IP          NODE                    NOMINATED NODE   READINESS GATES
serve-668b66f786-85wnq   1/1     Running   0          8m54s   10.12.0.6   member2-control-plane   <none>           <none>
# kubectl get svc --context member1
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.11.0.1       <none>        443/TCP   16m
serve        ClusterIP   10.11.225.36    <none>        80/TCP    10m
# kubectl get svc --context member2
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.13.0.1       <none>        443/TCP   16m
serve        ClusterIP   10.13.181.116   <none>        80/TCP    10m
# kubectl get EndpointSlice -l kubernetes.io/service-name=serve --context member2
NAME                  ADDRESSTYPE   PORTS     ENDPOINTS   AGE
member1-serve-9cxbg   IPv4          8080      10.10.0.6   24h
member3-serve-7j5dl   IPv4          <unset>   <unset>     24h
serve-dgpm5           IPv4          8080      10.12.0.6   24h
```

Sending test requests from member2

```shell
# kubectl --context member2 run -i --rm --restart=Never --image=jeremyot/request:0a40de8 request -- --duration=30s --address=serve
If you don't see a command prompt, try pressing enter.
2023/12/27 06:07:26 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:27 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:28 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:29 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:30 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:31 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:32 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:33 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:34 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:35 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:36 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:37 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:38 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:39 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:40 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:41 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:42 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:43 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:44 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:45 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:46 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:47 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:48 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:49 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:50 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:51 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:52 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
2023/12/27 06:07:53 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'
2023/12/27 06:07:54 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'
pod "request" deleted
```

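There were 29 requests in total: member1 (10.10.0.6) received 15 and member2 (10.12.0.6) received 14. Every message begins with "hello from cluster member1" because the Deployment's --message argument hard-codes that text and the same Pod template (hash 668b66f786) runs in both clusters; the Node, Pod and Address fields show which cluster actually served each request.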
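The tally can be reproduced by counting the `Address:` field of each log line instead of the message prefix, which always reads "member1". A small Python sketch; `count_by_address` is a hypothetical helper, and the sample lines are the first three entries of the log above (pipe the full log in to get the 15/14 split).

```python
import re
from collections import Counter

# Matches the "Address: <ipv4>" part of each request log line.
ADDRESS_RE = re.compile(r"Address: (\d+\.\d+\.\d+\.\d+)")


def count_by_address(log_lines):
    """Count responses per backend Pod IP; lines without an address are skipped."""
    counts = Counter()
    for line in log_lines:
        match = ADDRESS_RE.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts


sample_log = [
    "2023/12/27 06:07:26 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'",
    "2023/12/27 06:07:27 'hello from cluster member1 (Node: member2-control-plane Pod: serve-668b66f786-85wnq Address: 10.12.0.6)'",
    "2023/12/27 06:07:28 'hello from cluster member1 (Node: member1-control-plane Pod: serve-668b66f786-gdqrq Address: 10.10.0.6)'",
    'pod "request" deleted',
]
print(count_by_address(sample_log))
```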

iptables mode

```shell
# iptables -t nat -nL
Chain PREROUTING (policy ACCEPT)
target     prot opt source               destination
KUBE-SERVICES  all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes service portals */
...

Chain INPUT (policy ACCEPT)
target     prot opt source               destination

Chain OUTPUT (policy ACCEPT)
target     prot opt source               destination
KUBE-SERVICES  all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes service portals */
...

Chain POSTROUTING (policy ACCEPT)
target     prot opt source               destination
KUBE-POSTROUTING  all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes postrouting rules */
...

# This chain marks passing packets (0x4000); marked packets are handled specially later.
# In KUBE-POSTROUTING, packets on the node that carry this Kubernetes-specific mark
# are SNATed (MASQUERADE of the source IP) when they leave the node.
Chain KUBE-MARK-MASQ (16 references)
target     prot opt source               destination
MARK       all  --  0.0.0.0/0            0.0.0.0/0            MARK or 0x4000
...

Chain KUBE-POSTROUTING (1 references)
target     prot opt source               destination
RETURN     all  --  0.0.0.0/0            0.0.0.0/0            mark match ! 0x4000/0x4000
MARK       all  --  0.0.0.0/0            0.0.0.0/0            MARK xor 0x4000
MASQUERADE  all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes service traffic requiring SNAT */ random-fully

# A KUBE-SEP chain is an endpoint behind a KUBE-SVC chain; when the serviceInfo
# received contains endpoint information, a jump rule is created for each endpoint.
Chain KUBE-SEP-IGFYH4Q532RZE4G2 (1 references)
target     prot opt source               destination
KUBE-MARK-MASQ  all  --  10.10.0.6            0.0.0.0/0            /* default/serve */
DNAT       tcp  --  0.0.0.0/0            0.0.0.0/0            /* default/serve */ tcp to:10.10.0.6:8080

Chain KUBE-SEP-N5FCR2JKVEID5ZPW (1 references)
target     prot opt source               destination
KUBE-MARK-MASQ  all  --  10.12.0.6            0.0.0.0/0            /* default/serve */
DNAT       tcp  --  0.0.0.0/0            0.0.0.0/0            /* default/serve */ tcp to:10.12.0.6:8080

Chain KUBE-SERVICES (2 references)
target     prot opt source               destination
...
# KUBE-SERVICES jumps to the KUBE-SVC-ICRL3RCP3N62CZN4 chain for the default/serve service
KUBE-SVC-ICRL3RCP3N62CZN4  tcp  --  0.0.0.0/0            10.13.181.116        /* default/serve cluster IP */ tcp dpt:80
...

# KUBE-SVC-ICRL3RCP3N62CZN4 is created for default/serve; if no Endpoint is ready, this chain has no rules
Chain KUBE-SVC-ICRL3RCP3N62CZN4 (1 references)
target     prot opt source               destination
KUBE-MARK-MASQ  tcp  --  !10.12.0.0/16         10.13.181.116        /* default/serve cluster IP */ tcp dpt:80
KUBE-SEP-IGFYH4Q532RZE4G2  all  --  0.0.0.0/0            0.0.0.0/0            /* default/serve -> 10.10.0.6:8080 */ statistic mode random probability 0.50000000000
KUBE-SEP-N5FCR2JKVEID5ZPW  all  --  0.0.0.0/0            0.0.0.0/0            /* default/serve -> 10.12.0.6:8080 */
...
```

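Kubernetes generates a large number of rules; unrelated ones have been trimmed and only the relevant rules are kept. The dump was taken in member2, where the serve ClusterIP is 10.13.181.116.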

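The rules show the path KUBE-SERVICES → KUBE-SVC-ICRL3RCP3N62CZN4, and that chain contains two KUBE-SEP chains: KUBE-SEP-IGFYH4Q532RZE4G2 and KUBE-SEP-N5FCR2JKVEID5ZPW, which correspond to the serve endpoints in member1 (10.10.0.6) and member2 (10.12.0.6) respectively. The first jump matches with probability 0.5 and the second catches the remaining traffic, so each endpoint receives about half of the requests.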
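kube-proxy spreads traffic evenly across n endpoints by giving the i-th KUBE-SEP jump (counting from 0) a match probability of 1/(n - i), with the last jump left unconditional; for two endpoints that is the 0.5 followed by a catch-all rule seen above. A short Python sketch, using exact fractions and illustrative function names, checks that this cascade gives every endpoint a 1/n share.

```python
from fractions import Fraction


def rule_probabilities(n):
    """Match probability of the i-th KUBE-SEP jump for n endpoints.

    Rule i matches with probability 1/(n - i); for the last rule this is 1,
    i.e. it has no statistic match and takes whatever is left.
    """
    return [Fraction(1, n - i) for i in range(n)]


def effective_shares(probs):
    """Overall share of traffic reaching each rule in the cascade."""
    shares = []
    remaining = Fraction(1)  # fraction of packets not yet matched by earlier rules
    for p in probs:
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


for n in (2, 3, 4):
    print(n, rule_probabilities(n), effective_shares(rule_probabilities(n)))
```

Before rule i, a fraction (n - i)/n of the traffic is still unmatched, and 1/(n - i) of that is 1/n, which is why the cascade is uniform.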

ipvs mode

```shell
# iptables -t filter -nL
Chain INPUT (policy ACCEPT)
target     prot opt source               destination
KUBE-IPVS-FILTER  all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes ipvs access filter */
KUBE-PROXY-FIREWALL  all  --  0.0.0.0/0            0.0.0.0/0            /* kube-proxy firewall rules */
KUBE-NODE-PORT  all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes health check rules */
KUBE-FIREWALL  all  --  0.0.0.0/0            0.0.0.0/0

Chain FORWARD (policy ACCEPT)
target     prot opt source               destination
KUBE-PROXY-FIREWALL  all  --  0.0.0.0/0            0.0.0.0/0            /* kube-proxy firewall rules */
KUBE-FORWARD  all  --  0.0.0.0/0            0.0.0.0/0            /* kubernetes forwarding rules */

Chain OUTPUT (policy ACCEPT)
target     prot opt source               destination
KUBE-FIREWALL  all  --  0.0.0.0/0            0.0.0.0/0
...

Chain KUBE-IPVS-FILTER (1 references)
target     prot opt source               destination
RETURN     all  --  0.0.0.0/0            0.0.0.0/0            match-set KUBE-LOAD-BALANCER dst,dst
RETURN     all  --  0.0.0.0/0            0.0.0.0/0            match-set KUBE-CLUSTER-IP dst,dst
RETURN     all  --  0.0.0.0/0            0.0.0.0/0            match-set KUBE-EXTERNAL-IP dst,dst
RETURN     all  --  0.0.0.0/0            0.0.0.0/0            match-set KUBE-HEALTH-CHECK-NODE-PORT dst
REJECT     all  --  0.0.0.0/0            0.0.0.0/0            ctstate NEW match-set KUBE-IPVS-IPS dst reject-with icmp-port-unreachable
...

# ipvsadm -Ln -t 10.13.181.116:80
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
TCP  10.13.181.116:80 rr
  -> 10.10.0.6:8080               Masq    1      0          15
  -> 10.12.0.6:8080               Masq    1      0          15
```
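With the `rr` scheduler and equal weights (1 each), IPVS hands new connections to the real servers in turn. That is consistent with the strictly alternating member1/member2 pattern in the request log and with the equal InActConn counters. A toy Python model, assuming each request opens a new connection and ignoring weights and connection state; `round_robin` is an illustrative name, not an IPVS API.

```python
from collections import Counter
from itertools import cycle, islice


def round_robin(real_servers, n_connections):
    """Assign each new connection to the next real server in turn (equal weights)."""
    return list(islice(cycle(real_servers), n_connections))


servers = ["10.10.0.6:8080", "10.12.0.6:8080"]  # real servers from ipvsadm above
picks = round_robin(servers, 29)                # 29 requests, as in the log
print(picks[:4])
print(Counter(picks))
```

Starting from 10.10.0.6, 29 connections split 15/14, the same split as the member2 request log.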

Summary

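Compared with the approach used before v1.7, the new approach from v1.8 onward makes cross-cluster service governance much simpler: creating a MultiClusterService is enough to get cross-cluster service access working. The Karmada community moves quickly and its documentation is thorough, which makes it well worth learning from.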

References

- https://github.com/karmada-io/karmada/tree/master/docs/proposals/service-discovery

- https://www.lijiaocn.com/%E9%A1%B9%E7%9B%AE/2017/03/27/Kubernetes-kube-proxy.html

- https://kubernetes.io/docs/concepts/services-networking/endpoint-slices/#ownership