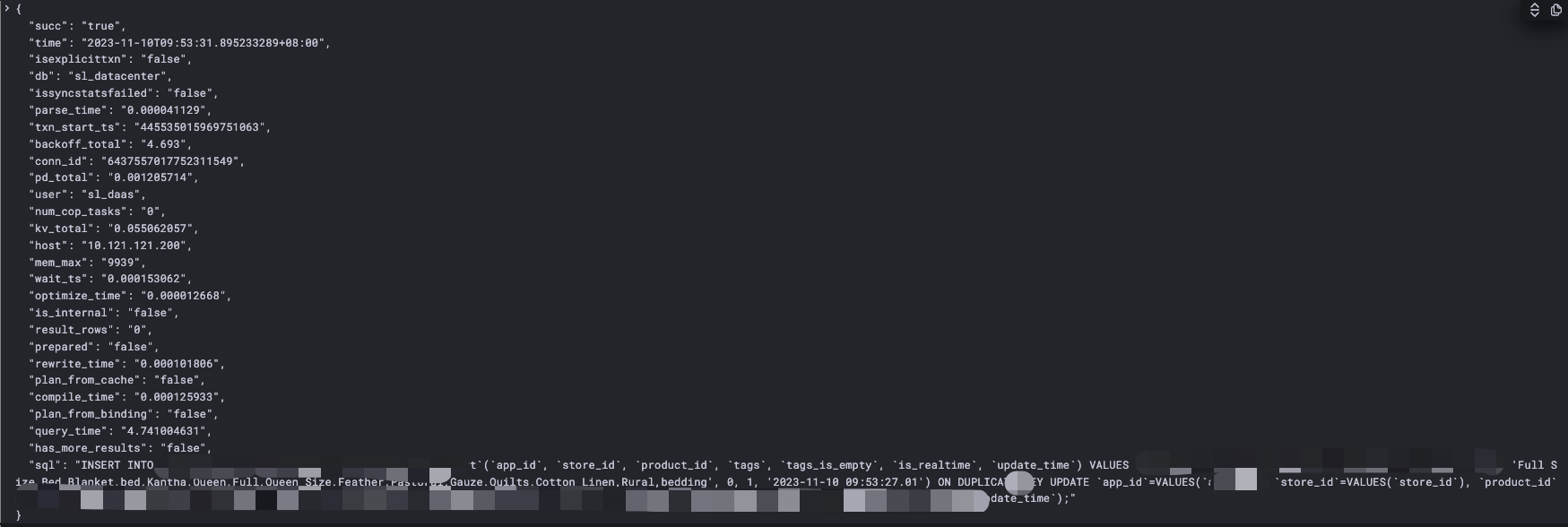

| cat << 'EOF' > /data/services/fluent-bit/conf/filters.lua

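-- Fluent Bit Lua filter callback: splits the slow-log entry in record["log"]
-- into individual lower-cased fields.
function extract_string(tag, timestamp, record)
    local log = record["log"]
    -- Nothing to parse: return code 0 keeps the record unchanged.
    if type(log) ~= "string" then
        return 0, timestamp, record
    end
    local new_record = {}
    -- Separator between a field name and its value.
    local base_pattern = ":%s"
    -- Fields captured as strings.
    local str_arr = {
        "Time",
        "DB",
        "User",
        "Host",
        "Index_names",
        "Preproc_subqueries",
        "Is_internal",
        "Prepared",
        "Plan_from_cache",
        "Plan_from_binding",
        "Has_more_results",
        "Succ",
        "Prev_stmt",
        "IsExplicitTxn",
        "IsWriteCacheTable",
        "IsSyncStatsFailed",
        "sql"
    }
    for i = 1, #str_arr do
        -- Default: "<name>: <alphanumeric value>"; the fields below override it.
        local pattern = string.format("%s%s%s", str_arr[i], base_pattern, "(%w+)")
        if str_arr[i] == "Time" then
            -- "-" and "." are magic characters in Lua patterns, so escape them.
            pattern = "Time:%s(%d+%-%d+%-%d+T%d+:%d+:%d+%.%d+%+%d+:%d+)"
        elseif str_arr[i] == "DB" then
            -- Accept names with or without punctuation, e.g. "test" or "my_db".
            pattern = "DB:%s([%w%p]+)"
        elseif str_arr[i] == "sql" then
            -- The statement is whatever follows the IsSyncStatsFailed line, up to the last ";".
            pattern = "#%sIsSyncStatsFailed:%s.*\n(.*;)"
        elseif str_arr[i] == "Host" then
            pattern = "#%sUser@Host:.*@%s(%d+%.%d+%.%d+%.%d+)"
        elseif str_arr[i] == "User" then
            pattern = "#%sUser@Host:.*%[(.*)%]%s@"
        end
        local field = string.match(log, pattern)
        if field then
            new_record[string.lower(str_arr[i])] = field
        end
    end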

    local int_arr = {
        "Txn_start_ts",
        "Conn_ID",
        "Num_cop_tasks",
        "Mem_max",
        "Disk_max",
        "Result_rows",
        "Exec_retry_count",
    }
    for i = 1, #int_arr do
        local pattern = string.format("%s%s%s", int_arr[i], base_pattern, "(%d+)")
        local field = string.match(log, pattern)
        if field then
            new_record[string.lower(int_arr[i])] = field
        end
    end

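    -- Fields whose value starts with an IPv4 address.
    local addr_arr = {
        "Cop_proc_addr",
        "Cop_wait_addr",
    }
    for i = 1, #addr_arr do
        -- The parentheses capture only the address; without them string.match
        -- returns the whole match, field name included.
        local pattern = string.format("%s%s%s", addr_arr[i], base_pattern, "(%d+%.%d+%.%d+%.%d+)")
        local field = string.match(log, pattern)
        if field then
            new_record[string.lower(addr_arr[i])] = field
        end
    end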

    -- Fields captured as decimal numbers (kept as strings).
    local float_arr = {
        "Query_time",
        "Cop_time",
        "Parse_time",
        "Compile_time",
        "Rewrite_time",
        "Optimize_time",
        "Wait_TS",
        "Preproc_subqueries_time",
        "Cop_proc_avg",
        "Cop_proc_p90",
        "Cop_proc_max",
        "Cop_wait_avg",
        "Cop_wait_p90",
        "Cop_wait_max",
        "Exec_retry_time",
        "KV_total",
        "PD_total",
        "Backoff_total",
        "Write_sql_response_total",
        "Backoff_Detail",
    }
    for i = 1, #float_arr do
        -- "%." matches a literal decimal point; a bare "." would match any character.
        local pattern = string.format("%s%s%s", float_arr[i], base_pattern, "(%d+%.%d+)")
        local field = string.match(log, pattern)
        if field then
            new_record[string.lower(float_arr[i])] = field
        end
    end

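    -- Uncomment to keep the raw entry alongside the parsed fields.
    -- new_record["message"] = record["log"]
    -- Return code 1: both the timestamp and the record are passed back to Fluent Bit.
    return 1, timestamp, new_record
end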

EOF

|
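
For context, a minimal sketch of how this script might be wired into Fluent Bit using the classic configuration format. The `script` path and the `call` name come from the listing above. The `Match` value `tidb.slowlog` is a hypothetical tag, and the sketch assumes an upstream input that already delivers each multi-line slow-log entry as a single `log` field.

```text
[FILTER]
    # Run the Lua filter on records whose tag matches (tag name is an assumption)
    Name    lua
    Match   tidb.slowlog
    # Script written by the heredoc above
    script  /data/services/fluent-bit/conf/filters.lua
    # Lua function that Fluent Bit calls for every record
    call    extract_string
```

Because the function returns `new_record` rather than the incoming record, only the extracted fields reach the output unless the commented-out `message` line is enabled.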